Data Engineering for PMs: A Must-Do Crash Course - PART 1

As I was gravitating toward being a Data PM, one of the first things I did was get lost in all the Data Engineering stuff! I was quite familiar with data analytics and data science terms: EDA, Machine Learning, covariance, statistical significance, long/wide tables, etc. (Doesn’t ring a bell? We will get to these as well!) But I was not familiar with things like ETL, Data Warehouses, etc.

And often, when I tried to scope a potential product area, I would either get the “requirements” wrong or just forget to think through the “data engineering” challenges, which my engineering counterparts did not appreciate. So, over the years, I built up my knowledge, and eventually even took a course in Data Engineering at UC Berkeley! Through this edition of The Data PM Gazette, I’ll save you some time and start building out a crash course on the main terms you should be aware of. And, of course, we will do this in two parts!

PART 1: History of Data Engineering - you must know what problems led to some of the most well-known tools in the Data Engineering world.

PART 2: Some critical Data Engineering tools and processes that you should account for while thinking through product requirements.

What is Data Engineering?

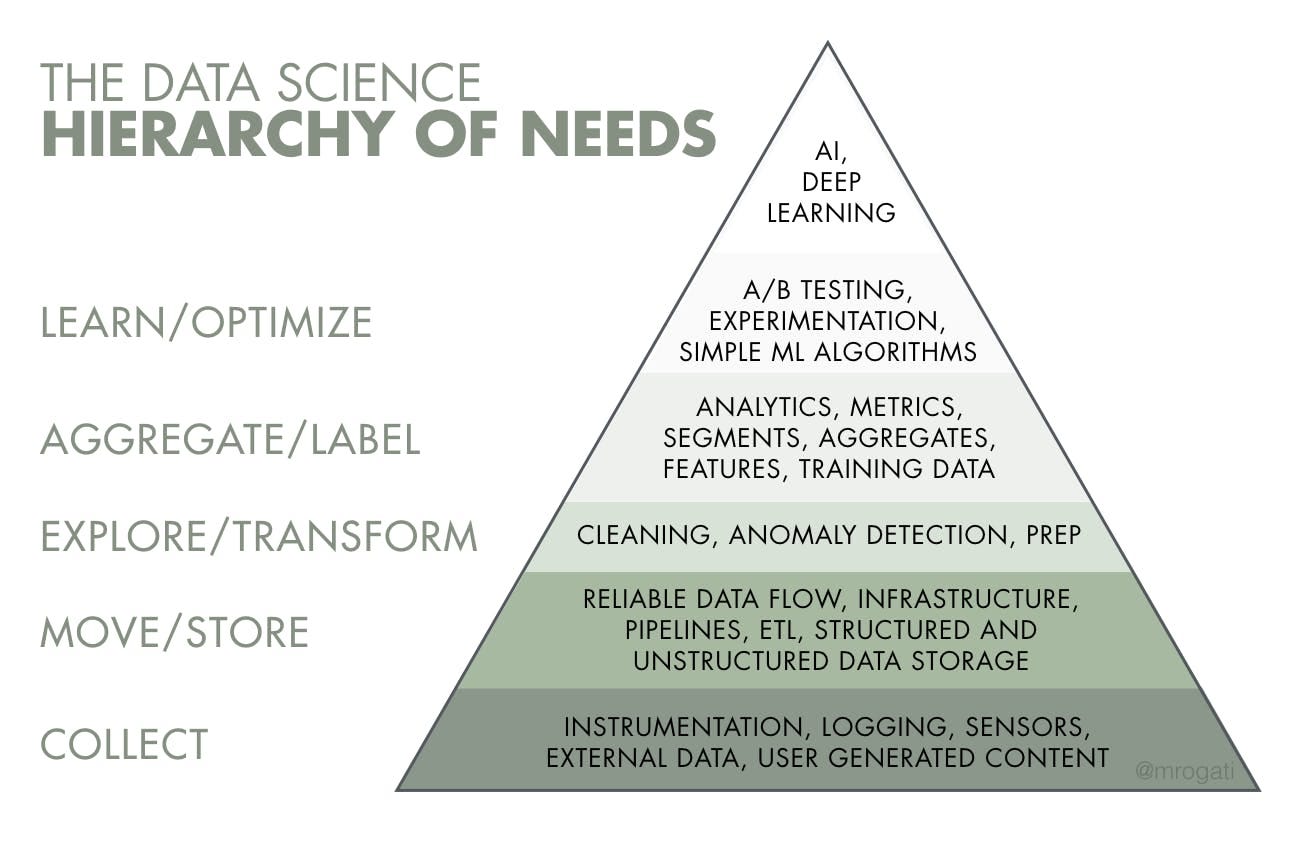

Data Engineering forms the bedrock of the “Data Science Hierarchy of Needs” i.e. it is foundational for building Data Products, and is a precursor for most Data Science and Machine Learning workflows!

As my professor defined it, “[Data Engineering is] a set of activities that include collecting, collating, extracting, moving, transforming, cleaning, integrating, organizing, representing, storing, and processing data.”

What’s important to note is that Data Engineering becomes critical if an organization has:

A large amount of “messy” data, whether structured or unstructured.

A need to collaborate across teams on accessing and processing data.

Fuzzy end objectives, but a desire to build Data Science and Machine Learning workflows.

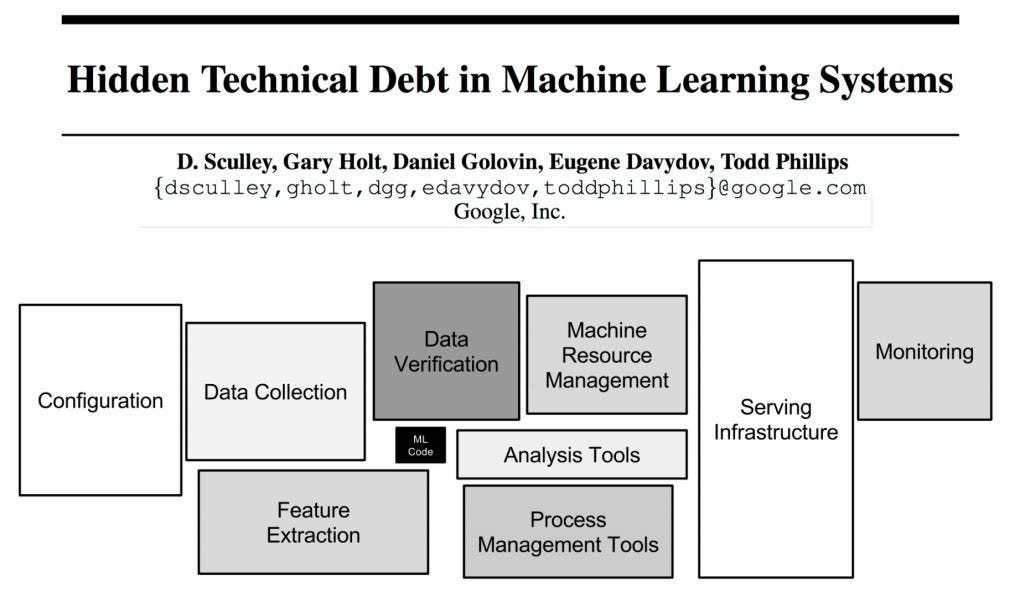

In fact, Data Engineering is essential to all analytics/data science/ML workflows. Here’s how a few researchers at Google demonstrated its importance: each block around the “ML Code” (black) box is Data Engineering, showcasing how much larger the Data Engineering needs are than writing the final ML code.

A brief history of Data Engineering (and most of the terms you will come across)!

Before we dive into the Data Engineering tools and terminologies, it’s important to look back at how the field evolved, so that you understand the problems each generation of tools was built to solve.

Early Days: Data Engineering traces its roots to the early days of computing when punch cards and magnetic tapes were used to store and process data. In the 1950s and 1960s, businesses began adopting mainframe computers to manage data and perform basic data processing tasks, where neither data nor its infrastructure was complex.

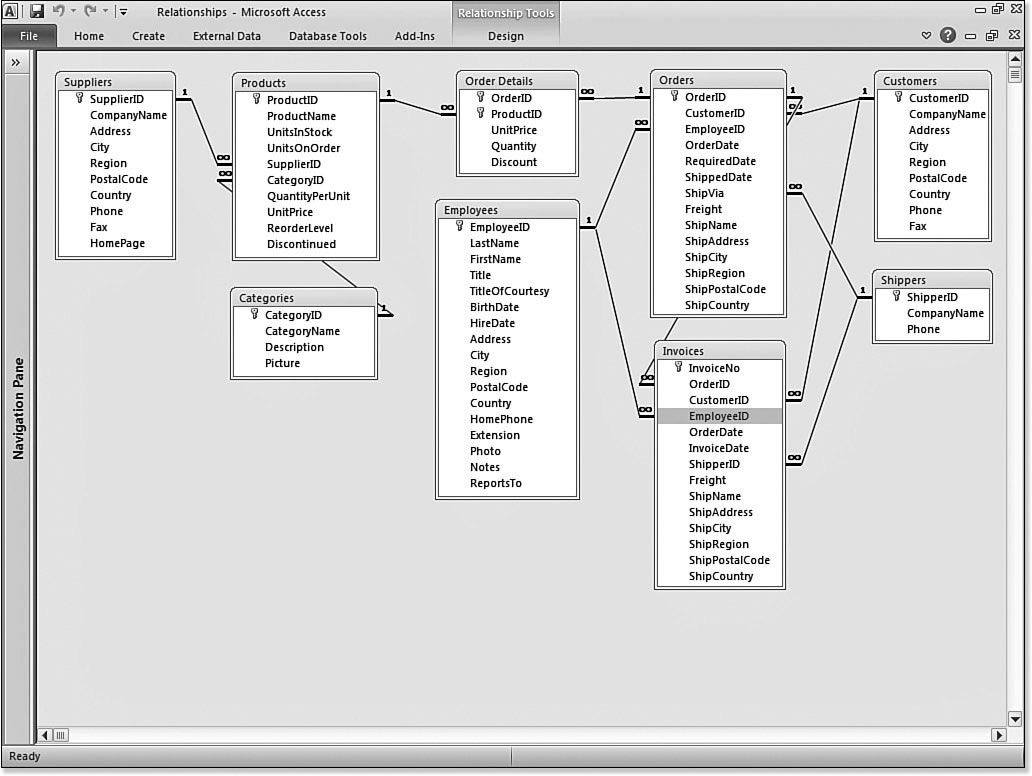

1970s: Database Systems and Relational Databases: In the 1970s, the development of database management systems (DBMS) and relational databases revolutionized data storage and retrieval. Edgar Codd's pioneering work on relational databases laid the foundation for a structured approach to data organization, and it also led to the creation of the language (that we still use today!) to retrieve and query data: SQL.

1980-1990s: Data Warehousing: In the 1980s and 1990s, businesses started realizing the value of collecting and integrating data from various sources into a central repository known as a data warehouse. This allowed for more comprehensive data analysis and reporting, as well as better-organized data. The concept originated at IBM!

1990-2000s: Internet and Big Data: With the rise of the Internet and the proliferation of digital devices in the late 1990s and early 2000s, the volume of data exploded. This led to the emergence of the term "Big Data," as traditional data processing technologies struggled to cope with the scale and complexity of the data being generated. At the time, this was mostly a problem for internet-scale companies such as Google, Amazon, and later Facebook (now Meta).

1990s-2000s: ETL (Extract, Transform, Load): In the late 1990s and early 2000s, ETL tools became essential for extracting data from various sources, transforming it into a consistent format, and loading it into data warehouses. While the basic need for ETL has not changed, the processes and tools have evolved. The market is now flooded with ETL and pipeline tools such as Fivetran, Rudderstack, Mage, Prefect, etc. But the most notable contribution came when Airbnb open-sourced its internal workflow orchestration tool, Airflow, which was then widely adopted for scheduling ETL pipelines and gave rise to a whole new paradigm of complicated data systems.
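To make the three ETL steps less abstract, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the two CSV "sources", the column names, and the `customers` table are hypothetical, and an in-memory SQLite database stands in for a real data warehouse. Real ETL tools do far more (scheduling, retries, monitoring), but the shape is the same.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports from two source systems with inconsistent formats.
CRM_CSV = "customer_id,signup_date,country\n1,2023-01-05,us\n2,2023-02-10,IN\n"
BILLING_CSV = "CustomerID,Amount\n1,19.99\n2,5.00\n1,10.01\n"


def extract(raw_csv):
    """Extract: read rows from a source (here, a CSV string) as dicts."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(crm_rows, billing_rows):
    """Transform: normalize formats and combine both sources per customer."""
    totals = {}
    for row in billing_rows:
        customer_id = int(row["CustomerID"])
        totals[customer_id] = totals.get(customer_id, 0.0) + float(row["Amount"])
    return [
        (
            int(row["customer_id"]),
            row["signup_date"],
            row["country"].upper(),  # consistent country codes
            round(totals.get(int(row["customer_id"]), 0.0), 2),
        )
        for row in crm_rows
    ]


def load(conn, rows):
    """Load: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id INTEGER PRIMARY KEY, signup_date TEXT, "
        "country TEXT, total_spend REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run extract, transform, and load in order; return the loaded rows."""
    rows = transform(extract(CRM_CSV), extract(BILLING_CSV))
    load(conn, rows)
    return rows


warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse
loaded_rows = run_pipeline(warehouse)
print(warehouse.execute("SELECT * FROM customers ORDER BY customer_id").fetchall())
```

As a PM, the useful takeaway is that each step is a place where requirements hide: which sources to extract, which rules define "clean", and where the result needs to land.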

2000s: NoSQL Databases: As Big Data applications grew in complexity, traditional relational databases faced limitations in terms of scalability and flexibility. NoSQL databases, like MongoDB, Cassandra, and others, emerged as alternatives that could handle unstructured and semi-structured data more effectively. Today, we are seeing a lot more types of databases coming in. From graph DBs to vector DBs to time series DBs, databases are now becoming more “purpose-driven.”

2000s: Distributed Computing and Hadoop: Around the mid-2000s, the concept of distributed computing gained popularity. Projects like Apache Hadoop, inspired by Google's MapReduce and Google File System (GFS) papers, emerged to address the challenges of processing and storing massive amounts of data across clusters of commodity hardware. Hadoop's own storage layer is the Hadoop Distributed File System (HDFS).
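The MapReduce idea mentioned above is easier to grasp with a toy example. The sketch below is a single-process illustration of the programming model using the classic word count, not Hadoop's actual API; the function names are hypothetical. In a real cluster, the map and reduce steps would run in parallel on many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by their key (the word)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    """Run map, shuffle, and reduce over a list of documents."""
    pairs = []
    for document in documents:  # each document could be mapped on a different machine
        pairs.extend(map_phase(document))
    return reduce_phase(shuffle(pairs))


print(word_count(["big data", "big clusters"]))
```

Because each document is mapped independently and each word is reduced independently, the work can be split across cheap machines, which is what made the approach attractive at that scale.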

2000s: Cloud Computing & Cloud Warehouses: The advancement of cloud computing in the mid-2000s revolutionized data engineering. Cloud platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offered scalable, cost-effective solutions for storing and processing data, and paved the way for cloud data warehouses such as Amazon Redshift and Google BigQuery.

2010s: Data Streaming and Real-time Analytics: With the rise of IoT devices and real-time data, data engineering evolved to support data streaming and real-time analytics. Technologies like Apache Kafka, Apache Beam, Apache Spark, and Apache Flink became popular for ingesting, processing, and analyzing data in real time.
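A rough way to picture the difference between streaming and the older batch style is shown below. This is a plain-Python sketch with made-up sensor readings, not how Kafka or Flink are actually used; it only contrasts "compute once over everything" with "update the answer as each event arrives".

```python
def batch_average(readings):
    """Batch: wait for the full dataset, then compute the answer once."""
    return sum(readings) / len(readings)


def streaming_average(readings):
    """Streaming: yield an updated average after every incoming event."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count


# Hypothetical temperature readings from an IoT sensor.
sensor_readings = [20.0, 22.0, 21.0, 25.0]

print(batch_average(sensor_readings))
for running_average in streaming_average(sensor_readings):
    print(running_average)
```

Both approaches end at the same final number, but the streaming version has a usable answer after every event, which is what real-time dashboards and alerts depend on.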

2020s: Modern Data Warehouses and Data Lakes: In recent years, data warehouses (structured data) such as Snowflake and data lakes (structured and unstructured data) such as Databricks have gained prominence and are innovating to overtake traditional “cloud data warehouses”. And it is not just the data warehouse: the underlying technology is improving too, from the query engine to the way we store data and the file system we use.

Want to learn more about the history? Look at this resource from Airbyte.

Now & Beyond: We are seeing a revolution in data technologies, in data modeling practices, and in organization structures that help teams become more “data agile”. This history is therefore just the tip of the iceberg that is called the “modern data stack”, in which Data Engineering tools play a massive role.

How does this history help a Data PM?

You can now relate to some of the technologies that your Data Engineers are using, and why they are using them. While it’s not your job to build “solutions”, this history helps you ask better questions. Is your team building for just “batch” data, or also for “stream/real-time” data? Are you using a Data Warehouse or a Data Lake, and what implications does that have? How are you doing ETL? Data Pipelines are not black boxes anymore! Feel better about Data Engineering? With PART 2, you can start to have some technical conversations too!

🔗 Data Links of the Week

Monte Carlo, a Data Observability platform, just announced “data product” monitoring. Love that “data products” is becoming an industry term, and the definition we have in this Substack resonates well!

“A Decade in Data Engineering” by SeattleDataGuy captures the most important innovations of the last decade well. Give it a read as a follow-up!

LLMs!!!! Have to have a link. Here’s a great primer by Will Thompson, called “What we know about LLMs” that will bring you up to speed!

Hope this week brings you less LLM anxiety, some summer heat relief, and loads of comfort with the world of Data Engineering!

Signing off,

Richa,

Chief Data Obsessor, The Data PM Gazette!