Database schema changes: the key to holistic Data PM PRDs.

As a Data PM who might be coming from an MBA or non-core CS background, database schema migrations are probably one of the most essential things to learn. At least, it was for me. This edition took its time as I wanted to do justice to this topic. Database migrations could be your “ace” of spades when it comes to having detailed conversations with Engineering counterparts, or negotiating how you can squeeze some timelines.

But before we understand database migrations, we first need to understand database schemas.

What is Database Schema?

A database schema is essentially a blueprint or architecture of a database’s structure. It defines how data is organized and how the relationships among data are associated. It involves tables, views, and other elements. The schema specifies the tables that should be in a database, the columns they contain, along with their data types, and the relationships among tables.

Example of a Database Schema

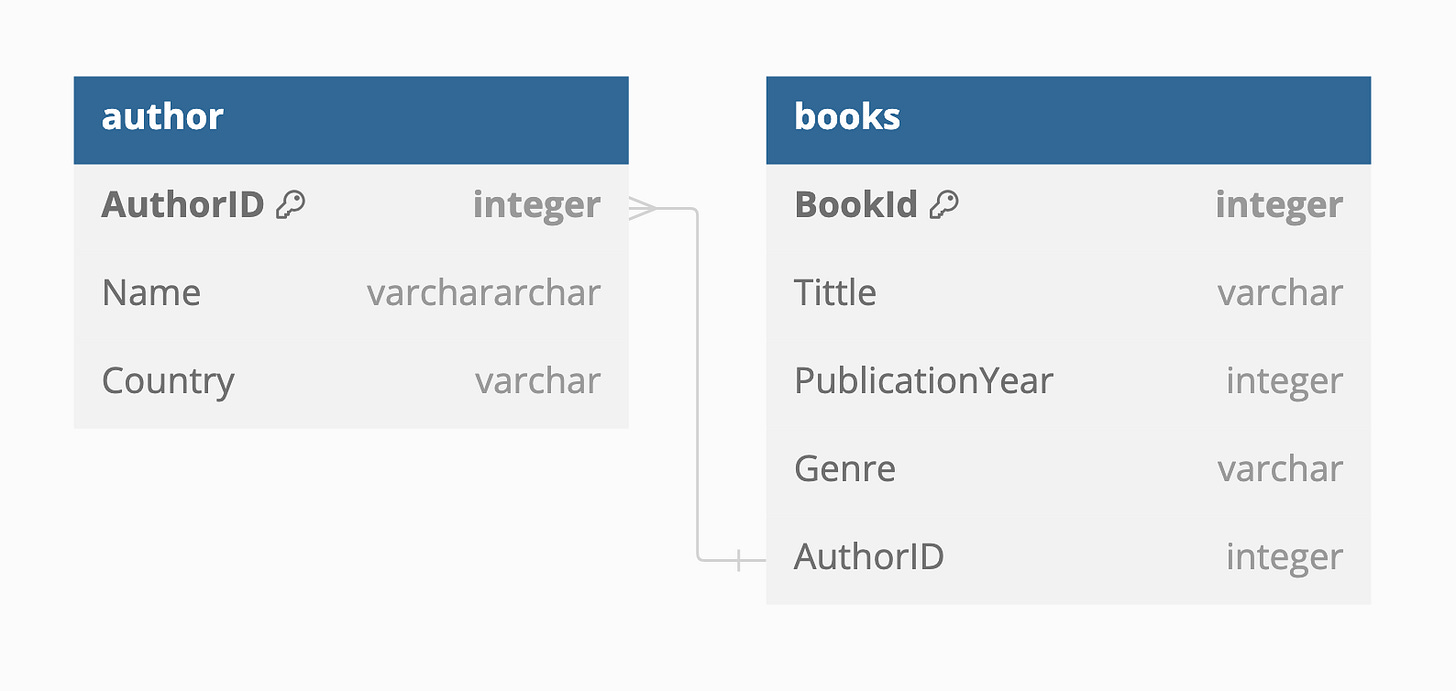

Here’s a simple database schema, courtesy of chatGPT. Imagine a simple database for a bookstore, with two primary entities: Books and Authors. The database schema for this example could be outlined as follows:

Authors Table

AuthorID(Primary Key, Integer, Not Null): A unique identifier for each author.Name(Varchar, Not Null): The name of the author.Country(Varchar, Null): The country of origin of the author.

Books Table

BookID(Primary Key, Integer, Not Null): A unique identifier for each book.Title(Varchar, Not Null): The title of the book.PublicationYear(Integer, Not Null): The year the book was published.Genre(Varchar, Null): The genre of the book.AuthorID(Foreign Key, Integer, Not Null): A reference to theAuthorIDinAuthorstable, indicating who wrote the book.

Relationships

The

Bookstable has a foreign key (AuthorID) that links to theAuthorstable. This represents a many-to-one relationship, as many books can be written by one author.

This schema defines the structure and constraints of the data, ensuring that books are always associated with an author and that the relevant information for both books and authors is stored according to the defined data types and constraints.

What are database migrations?

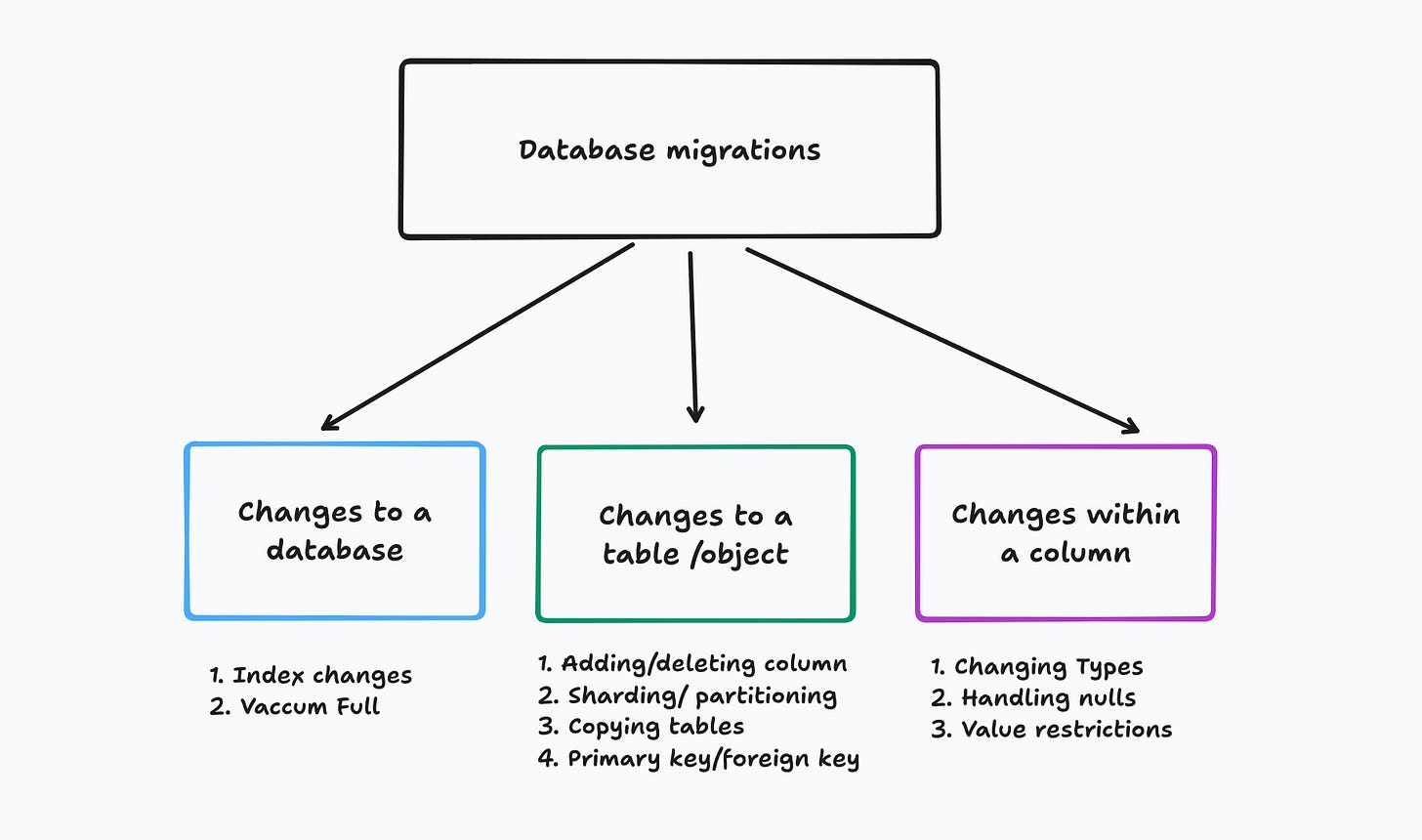

Database migrations refer to making changes or updates to a database schema over time. This process is essential for managing the evolution of a database’s structure in a controlled and systematic manner. Migrations can include various types of modifications, such as adding or dropping tables, creating or deleting columns, changing data types of columns, and updating indexes and constraints. Here’s an overview of what type of database migrations do teams often encounter:

Why should you care about it as a Data PM?

As a PM if you suggest a new feature, you will most likely cause schema migrations, and some schema migrations are not trivial. Legacy databases such as MySQL can have downtime for users sometimes. Therefore, sometimes developers create an exact copy of the previous database and replace the new database with a live database. But, you can imagine, one mistake in this migration can bring the application down.

New SQL databases such as Postgres, on the other hand, can alter schemas live but with only some limited capabilities. NoSQL databases such as MongoDB are schema-less i.e. they maintain objects rather than a relational structure, and so database changes can be simpler, but they can lead to data inconsistencies over the long run. And, application teams end up creating their own “rules around databases” to ensure consistent data.

Some newer age databases such as CockroachDB, a distributed relational DB, will handle live schema management without downtime but are low in adoption yet.

So, how can schema migration impact your product:

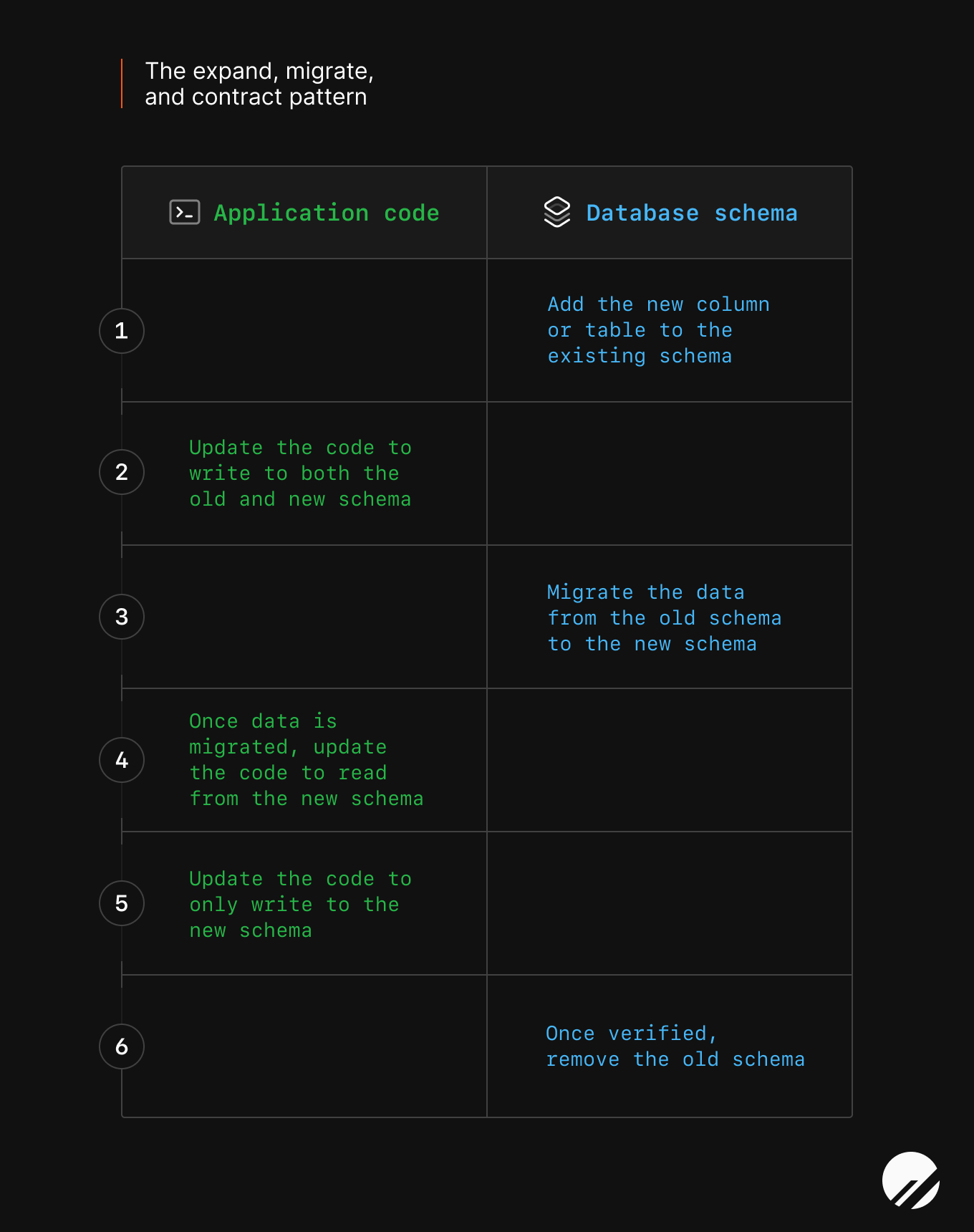

It can cause downtime if one step from the “expand, migrate, contract” pattern (see image below) goes missing.

A previous migration can make it hard to migrate schemas in the future. For example, you had previously deleted a column called “id” and you re-added a new column “id” but this semantically means something else, your application logic can fail if there is any logic left for the previous column “id”.

It can cause data discrepancies if not implemented correctly. Let’s say you have a production MongoDB, and you added a new key-value pair called “type: customer” but didn’t populate all objects for customers, your dataset will have consistencies.

If it’s not backward compatible, it will prevent feature rollback. Suppose you deleted a column for a new feature, but there is no duplicate table that has the data stored, and for some reason, you need to restore your data if you rollback that feature, you can’t do that if you didn’t think of backward compatibility ahead.

If the database query for migration is large, the application layer will become too slow to respond. Suppose a customer can add “unlimited” new fields to your table because of a feature, your table can grow out of proportion so quickly that the application layer will take seconds or even minutes to show data.

So, what are the best practices around schema migrations:

Most people use some sort of SQL database these days, given most application wants to support fast queries and that’s only possible with SQL databases such as Postgres or columnar stores such as Parquet files (still low in adoption in production systems). So, for this section, we will specifically discuss schema migration best practices to keep in mind for SQL databases (reference):

Given this, while writing your PRD, check whether the way you are defining is leading to any of the following:

Is your feature definition causing a downtime-worthy schema migration?

This would happen if you are causing too many alterations to the current schema or updating the index in a blocking way.

Adding an ability to modify schema on the fly without any bounds is a sure-shot recipe to bring your production system down.

Is your feature definition creating many columns?

See if that needs any back-filling of data if you create any new column, i.e. what happens to previous rows that don’t have any value.

Is this column a default i.e. needs data for sure? What value should be filled by default?

Or has any other unique constraint i.e. can’t be left empty (username) or can’t have negative values (phone numbers)

Because of your feature, would your data tables grow exponentially in future?

In that case, your engineers might have to apply new indexes so that application queries are performant.

Can your engineers think of applying a “Star Schema” instead?

Do you need a way of only querying partial data?

Suppose your database grows exponentially with each year for your customer. Can your engineers cut off the data at a 2-year time frame, etc? This might require them to apply “sharding” or “partitioning” and that can increase the time it takes to implement the feature.

Does your feature need to be backward compatible?

Can your feature be rolled back in a way that requires a database to retain a previous state? It is very important to call that out because this will heavily inform the design of the database.

A feature such as “maintaining version history” and reverting to a previous state can require backward compatibility, and so for such features, the frontend experience is standard and quick to build, but it’s the architecture upgrade for backward compatibility that requires effort.

Final Thoughts

Teams are doing database schema migrations all the time, so this is nothing new for backend engineers or scrum teams to handle. However, if you as a PM have empathy around different processes, it helps to understand the timelines that your engineering counterparts are giving, as well as include better requirements around rollbacks, defining the data retention policies, should you be creating features that explode databases or building features that require backward compatibility? It can’t be a feature that can come as an afterthought, your architects should be thinking about it in the early days.

Lastly, if you are transferring data between two sources regularly, having established well-defined data contracts around how you handle data, compatibility of sources, etc. is key. A good case study would be Delta Lake enforcement by Databricks.

What were your learnings around database migrations? Any feature that took more than a year to roll out? What has been a great case study of thinking of database migrations from the very start? I would love to know! Just respond to this substack :)

🔗 Links of the month

Haven’t started experimenting with LLMs? Know Databricks already? Here’s SeattleDataGuy’s guide on how you can engineer your data to work with LLMs in Databricks.

Matt Turck’s MAD landscape is out! What was surprising? What was not?

If you work on a product that handles tones of events, don’t miss out on timo's substack about event data handling

Cheers,

Richa

Your Chief Data Obsessor, The Data PM Gazette.