Hi everyone,

Welcome to the 10th edition of the Data PM Gazette! Yay! It’s been a 10-week streak of sharing my knowledge about Data Products. My goal this year is to write consistently for 50 weeks, and I will not miss the 10th!

So, here comes another 3-part series. This time, I am focussing on “problem/customer discovery”, one of the top skills for a Data PM and the most valuable PM skill in general. In each part, we will explore a different type of Data Product that I was fortunate to PM, and what I learned about doing discovery better.

Now, why 3? Each time I have approached customer discovery, I have realized that how you approach it varies greatly depending on the product, stage, and company. So you are always left with doubts: Have I covered enough ground? Have I looked at the right insights? This is especially true if you have started in a role where others have more context than you do. It gets worse if you try to PM in a startup, as you will only have qualitative data that’s highly subjective. And, finally, if you lead a Platform product, you might get some quantitative data such as SLAs, uptimes, cost, etc., but you can’t make user-driven decisions on the basis of them. Even speaking to the end customer won’t help you make long-term product calls.

Here’s what you can do at different stages to get signals on whether your product is working or where you need to focus. You can’t always measure everything, so signals are what you can focus on. The more user-rooted your signals, the better your bets.

Through this series, let’s explore how customer discovery may change for different types of products in different buckets.

Case 1: A Pre-PMF to Growth product that had a UX problem

I worked on a Machine Learning product at one of my previous jobs. You can categorize it as a UX Product in the Pre-PMF → Growth stage. We had an amazing machine learning algorithm to get more “prescriptive” insights. It was a simple product: users could connect their data sources through our web app, run our algorithm against their dataset, and get insights that they wouldn’t find in a standard BI tool.

Our challenge was that while we had a good reception from the market and in ticket size, we were not seeing growth in ‘Active Users’ and wanted to change that. So, here’s how I approached discovery.

My constraints

I was quite new to the company, and it was hard to pinpoint what the exact challenge was because I didn’t have the context of users.

It was hard to get in front of the customer all the time, as we had a small set of design partners we could contact, and they might be overworked.

Our product data was not something that we could use with confidence: it was a very small dataset, so any conclusions drawn from it would be guesses at best.

We didn’t have endless resources to ship ideas and see whether customers liked them, or to A/B test experiences, because that would mean double the resources.

And, finally, lots of people were trying to find the solution to the same problem, so everyone had a hypothesis, but really not a lot of data to back them.

So, I started to search for a problem-discovery process that wouldn’t be constrained by the realities above.

My problem-discovery process

There are a lot of tools of the trade one can use when it comes to customer/problem discovery: one can do a speaking tour internally, one can do customer interviews, one can look at data and start making small tweaks to the product and wait for the numbers to reflect any changes, one can do A/B testing, one can also make prototypes and test them with design partners.

Our goal was clear: drive more engagement. But, to do that, we didn’t really know where the problem was. So, I banked on the classic “design thinking approach” of Diverge and Converge, or the Double Diamond Method. It’s not a very complicated approach: it means gathering insights from many sources (“Diverge”), defining the actual problem from what you are seeing (“Converge”), then trying many solutions (“Diverge”), and finally selecting the one that works (“Converge”).

Had the problem been more defined, I could have used a different problem-discovery approach.

To discover the actual customer problem, I did the following:

I did an internal speaking tour to understand why internal folks believed that engagement was low, and I found one theme: users didn’t get value from looking at our current visualization of the algorithm results. But we didn’t know if the algorithm was producing insufficient results or if it was just the visualization.

I looked at FullStory’s screen recordings (the second-best thing to speaking to users themselves) to see how people were struggling, and I found a lot of paper cuts in our experience. So, even if someone ran our algorithm (“showed intent”), they could not fully realize the value (“leaky bucket”).

I listened to many CSM and Exec calls to see what was missing from the algorithm or the experience, and tried to create a list of problems.

It wasn’t as clear when I started, and my insights were all over the place. But after having enough conversations and going through enough FullStory videos and Gong calls, I discovered two main things:

In our effort to cover more users (to drive engagement), we had stripped down the value for our core users. So, I made an effort to define our primary and secondary users and checked whether we were solving for our primary users well.

While our algorithm was good, the way we presented results didn’t help our primary users interpret them well. If we were to download and explore the same results in a spreadsheet or Tableau, the interpretation of our results would be much better.

So, I decided that we had to (a) solve only for our primary users (and it’s okay if secondary users aren’t happy), and (b) give them the experience that they expect when looking at the algorithm’s results. And, on discovering that people were preferring our experience, I decided to overhaul the long-standing UX of our algorithm.

How did we arrive at a solution?

Once we defined that, we wanted to create a front-end experience to help our primary users explore our results meaningfully faster. Because experiences are subjective, we didn’t want to spend time developing something only to realize that our primary users wouldn’t actually use it, contrary to most people’s hypothesis. But, because we had done our homework in problem definition, we were confident.

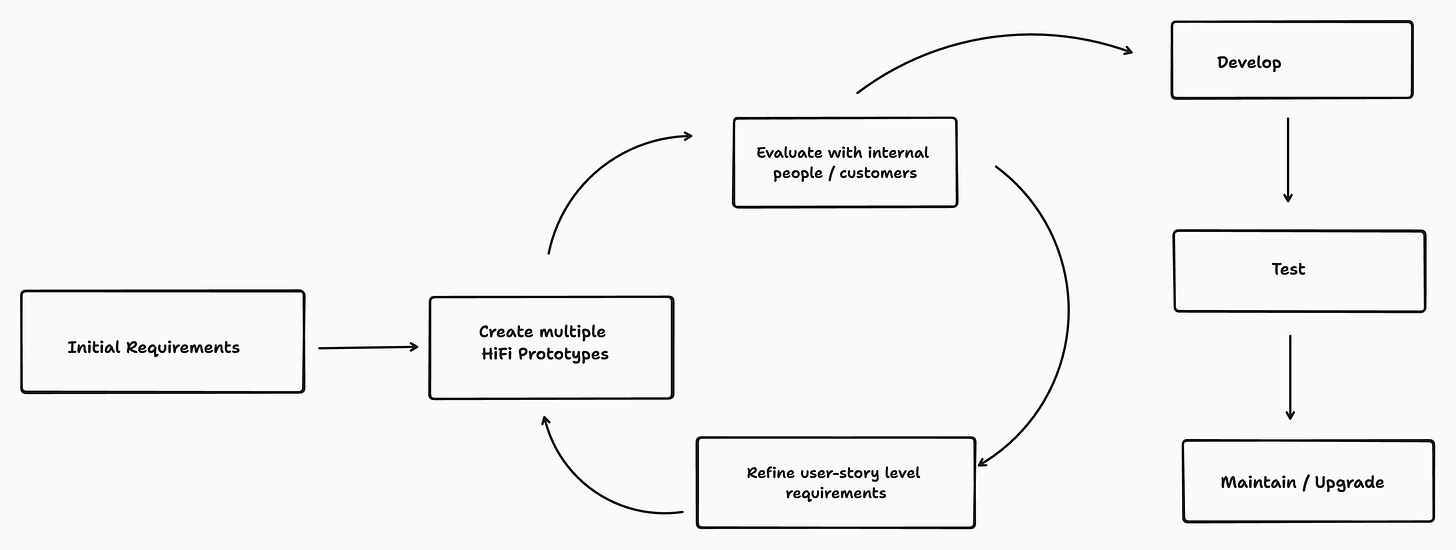

We created an experience that was pretty complex but very useful for our end users. We prototyped rigorously, devised multiple solutions at different stages, and gathered feedback on the prototype itself from internal stakeholders and our design partners to arrive at a version of the UX that our users showed confidence in. Then we shipped it!

The solution discovery could have been different, but subjective UX solutions require a different process for gaining confidence in a solution that works.

So, did it work?

I moved on from the company after this project was done 😞, so I don’t have access to data, but I have anecdotally heard that the user experience has been very well received by customers so far.

What did I do right?

Having a clear end goal in terms of “engagement” was really useful to drive a lot of decision-making and keep our discovery focussed.

My biggest strength in this process was listening in on as many qualitative signals as possible to form a viewpoint. It also made me the “voice” of the customer, as I would always have a FullStory link or a Gong link to back up my claim.

I wasn’t the only one doing discovery, so gathering signals from others’ calls and learning from them was also a big part. So was pushing back on certain views, as they might be too biased by a few interactions.

Spending time discovering the exact, granular problems with specific visualizations and UI elements was important to guide the solution with confidence, as was leaning on customers to know whether something made sense.

For solutions, I gave designers a free hand but created guiding principles to ensure the result would be useful for the end user and that we wouldn’t repeat our mistakes. They created solutions that I wouldn’t have thought of.

What did I not do right?

I didn’t document my customer discovery in a more structured manner. It was in many different documents, across tools, and I felt that while the core group knew, people outside wouldn’t have really understood the insights we got from our problem discovery.

I didn’t evangelize enough; I worked closely with designers to develop a solution but didn’t work with other internal stakeholders early on. Therefore, gaining confidence in what I was doing took some time.

Initially, I jumped to problems too soon, only to realize I needed to discover problems more deeply. So, the big learning was that if you discover a few signals about your product problems, dig deeper to make sure that you are uncovering the underlying problem. And how do you know if that is the underlying problem? If you keep hitting the same insight again and again, it probably is a good signal.

What can you learn from this example?

Based on this experience, I created a 6-point guide for myself that I am sharing with you. I hope this helps with your next discovery.

Don’t be rigid about a process/framework; choose a framework that will help you uncover the problem and solution well in a given situation.

Define the goal & have a north-star metric for the discovery.

Get multiple qualitative data points to fully form a particular hypothesis, and then test it with real users in interviews to arrive at a granular problem.

Ensure that you have defined the problem well, and with multiple examples.

Document all your learnings well, and communicate how you arrived at each insight so that others can validate your thinking.

Evangelize the problem with other PMs and Engineering Leads, and ask them to poke holes.

What mantras do you follow with your problem discovery work? What has worked? What didn’t work for you?

🔗 Links of the week

Timely as always, here’s a great curated guide from

about “Customer Discovery.”- analyzed all 139 YC startups around AI, and what an amazing analysis of what people are building.

And,

’s beautiful take on the return of Data Platforms — if you read my last post and got inspired to be a Data Platform PM, this is for you!

Share your feedback, comments, and what you want to read more of :)

Signing off,

Richa

Your Chief Data Obsessor.

Great post Richa! I loved that you spent most of the time walking through a real-world problem and appreciate you unpacking the problem space.

I also liked the Prototype Customer Feedback Loop diagram - I will have to keep this handy as it does mirror the process for data product discovery that I have led for UX/dashboard features.