Part 2: Unique Challenges in Data Product Management

This is the second post in the three-part Data PM series; here’s the first post.

In the previous post, I explored how the role of a Data PM differs from that of a regular PM. Unsurprisingly, a unique job comes with unique challenges! This post is about recognizing them and addressing them in your own work. I am assuming you are building a sizable data product, i.e., a data capability or a dashboard/report builder. Datasets and small features are fairly straightforward; these challenges matter most for bigger-scope work.

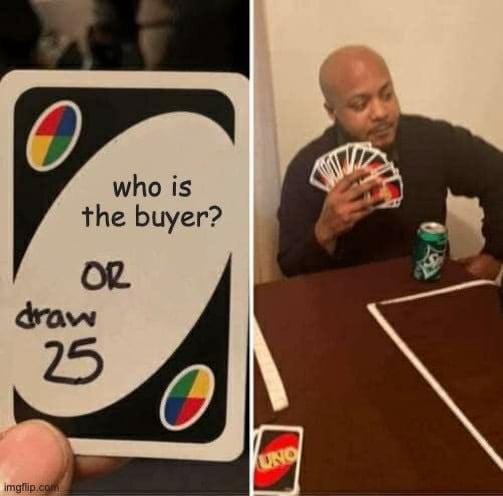

Challenge #1: Understanding the end consumer of the data is also your job.

In my experience building various data products, I realized that even if you are not building for the “end user” (e.g., you are building a data query API that other teams use to make dashboards!), you have to treat the end consumer as your consumer. When it comes to data products, you are mostly a B2B2C PM! So you must know who is going to use this data, what constraints you need to work within, and who will make decisions based on it. These questions are central to writing the right requirements and prioritizing accordingly. Plus, this exercise improves the “explainability” and “relevance” of the product when you have to bake those in.

Potential Solution - A good way to overcome this is to develop a deeper understanding of your end users’ personas. Think about the following:

Technically Sound or Not. A data scientist will want different stats alongside an analysis than a business user will.

UI-Driven or Prefers Code. Can you hand them a code-based response, or do they need a UI-driven solution?

End Goals. What do they want to produce in the end: a dashboard, a report, automated alerts, machine learning models, or all of these?

Depending on the persona, map out how they will interact with your product and what data (or, if you are a platform, what capabilities around data) they need to use, need access to, and would find life-easing. A Customer Journey Map is a great way to lay out these interactions!

Challenge #2: Constantly Educating Non-Technical Stakeholders about Data Complexity

Guess when the Data Scientist’s job became “sexy”? 2012, when Harvard Business Review called it the sexiest job of the 21st century! That was just a decade ago. From the leaders in your company to your customers, not everyone understands the challenges or the benefits. So, unlike a traditional PM, you also have to regularly evangelize the complexities of data science within your org. Otherwise, people will keep wondering, “Why is that model taking so long?”, especially when you can get ChatGPT to write a model too! 😛

Potential Solution - Make it your job to educate both “internal stakeholders” and “end users”.

Internal - Web developers building data products might not realize the breadth of issues around data updates, storage, and processing, so educate them on how different use cases work and what is needed versus not. For example, the same data stack that renders reports in an application might not work for internal exploratory data analysis or for machine learning work. As a Data PM, explain these constraints. Explain how “model training” cycles differ from web development cycles, how much maintenance external pipelines can demand, and so on.

End Users - Understand your end users and explain how a capability/model/data analysis works, what it does, and what it doesn’t do. Users will only adopt your product if they trust it, and trust in data comes from explainability and reliability.

In my experience, evangelization is an even bigger responsibility for a Data PM than getting the feature right. So, what can you do?

First, it starts with the brief you write. Call out dependencies. Call out shortcomings, and make the PRD so holistic that even a non-data person can understand what’s required and why it is complex to build.

Second, share related articles, technology stacks, etc. often to keep the conversation going.

Do internal launches! Run Product Review Meetings, Product Testing Parties, and Product Launches at All Hands/Demos.

For end users, write detailed blog posts, make explainer videos, and build a one-pager that explains your product and how it stands apart.

Explainability is a subjective UX problem, but you can tackle it. Add tooltips, just don’t overdo them. Explain your data sources and model well; you can always add a “view more” CTA for the details. For more tips, Yael Gavish has you covered here.

Challenge #3: You cannot take the classic MVP/Design sprint approach for data products!

Building a data product is a little different. With simpler products, proofs of concept can be validated using Figma prototypes: you can tell whether people will use a new feature or not. But because of the added dimension of data, a design prototype alone is not enough; you will have to run the model on some sample data, or show real numbers and analysis, to validate whether users find it useful. Users might like a dummy prototype and then react very differently once they see real data. That said, a dummy prototype is still useful for validating the UI.

Potential Solution - So, what can you do? Stage your execution. Rather than polishing your prototype or waiting for concrete signals, build something small (even if it’s bad), launch it, and see how users react. You don’t have to automate everything on day 1. Don’t shoot for the moon in the first iteration, or you may never launch. This famous HBR article gives good tenets for keeping engineering velocity high and staging execution efficiently:

Lightweight model/analysis: build simpler models first, so that they are easier to debug and ship.

Reduce data source dependency: use dummy data or create one-time dumps of your data sources before testing the models on production data.

Narrow the domain: don’t solve for all personas or all use cases at once. This can vastly reduce the number of edge cases and use cases you have to handle up front.

Hand-curate: you don’t need a 100% automated system/model/analysis. Can’t build ingestion yet? Do manual uploads! Validating the end product matters more than the short-term pain.
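To make these tenets a little more concrete, here is a minimal Python sketch that combines three of them: it reads a hand-curated, one-time CSV dump instead of a live data source, narrows the domain to a single metric, and uses a deliberately lightweight baseline (the average of past weeks) as the “model”. The dataset, column names, and function names are all hypothetical and only for illustration.

```python
import csv
import io
from statistics import mean

# Hypothetical one-time export, pasted in by hand instead of building ingestion.
SAMPLE_DUMP = """region,week,orders
north,1,120
north,2,130
north,3,125
south,1,80
south,2,90
south,3,85
"""


def load_dump(text: str) -> dict[str, list[int]]:
    """Read a hand-curated CSV dump into {region: [weekly orders, ...]}."""
    series: dict[str, list[int]] = {}
    for row in csv.DictReader(io.StringIO(text)):
        series.setdefault(row["region"], []).append(int(row["orders"]))
    return series


def baseline_forecast(history: list[int]) -> float:
    """Lightweight baseline: predict next week's orders as the average of past weeks."""
    return mean(history)


series = load_dump(SAMPLE_DUMP)
forecasts = {region: baseline_forecast(history) for region, history in series.items()}
print(forecasts)
```

If users find even this rough forecast useful, that is the signal to invest in real ingestion and a better model; if they don’t, you have learned it cheaply.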

Challenge #4: Evaluating and testing data analytics products is difficult.

Data products are hard to evaluate because it is hard to pinpoint whether the issue lies in the model/analysis or in the user experience built around it.

Insights products might not surface perfect insights at first, but as users engage more with the analysis, their feedback will only make it better. ML models, similarly, often improve in accuracy only over time. It gets even trickier for platform capabilities, because you might not cover all use cases, so what works now might be insufficient six months later. As a result, there is no straightforward way to evaluate and test such a product.

Potential Solution - Define success at the start: pre-define metrics for usage, model accuracy, and failure rates. Regularly audit what you build and study users’ qualitative behavior to understand whether the model, the platform capability, or the data report is actually solving something. No one likes building stuff that doesn’t get used.
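One way to make “pre-defined metrics” concrete is to write the targets down before launch and check observed numbers against them. The minimal Python sketch below assumes three hypothetical targets (weekly active users, accuracy on a labeled sample, and request failure rate); the names and thresholds are illustrative, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessTargets:
    """Hypothetical success criteria agreed on before launch."""
    min_weekly_active_users: int
    min_accuracy: float      # share of sampled predictions that were correct
    max_failure_rate: float  # share of requests that errored


def evaluate(targets: SuccessTargets, weekly_active_users: int,
             predictions: list[tuple[str, str]],
             requests_total: int, requests_failed: int) -> dict[str, bool]:
    """Check observed metrics against the pre-defined targets.

    predictions is a list of (predicted, actual) pairs from a labeled sample.
    """
    correct = sum(1 for predicted, actual in predictions if predicted == actual)
    accuracy = correct / len(predictions) if predictions else 0.0
    failure_rate = requests_failed / requests_total if requests_total else 0.0
    return {
        "usage": weekly_active_users >= targets.min_weekly_active_users,
        "accuracy": accuracy >= targets.min_accuracy,
        "reliability": failure_rate <= targets.max_failure_rate,
    }


targets = SuccessTargets(min_weekly_active_users=50, min_accuracy=0.75, max_failure_rate=0.02)
sample = [("churn", "churn"), ("stay", "stay"), ("churn", "stay"), ("stay", "stay"), ("stay", "stay")]
report = evaluate(targets, weekly_active_users=64, predictions=sample,
                  requests_total=1000, requests_failed=35)
print(report)  # {'usage': True, 'accuracy': True, 'reliability': False}
```

Keeping usage, accuracy, and reliability as separate checks can also help with the pinpointing problem: healthy accuracy with low usage hints at the experience around the model, while the reverse points more toward the model itself.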

Challenge #5: Privacy, security, and governance are your first three requirement points, always.

The added dimension of data brings “privacy” and “governance” concerns that you, as a PM, can’t ignore. As you write requirements, think about data governance within your various customers, whether you are dealing with personally identifiable information (PII), whether you need to provide download access, and how to manage data over time. There are many data privacy and security regulations that platforms must follow, and as a Data PM, you should bake them into your PRD!

Let’s say you launch a new API that can query person-level data but forget to list “auth” as a requirement. A developer who shouldn’t have access uses it and downloads PII. You will have met the feature requirement, but you will probably lose all credibility.

Potential Solution - Always think about the following while defining a new feature:

Who can access it? Should everyone get full access or partial access? Who can view, edit, or delete it?

Am I dealing with Personally Identifiable Information?

What standards does my company adhere to? Have at least a basic sense of them. What regulations are standard in my industry? Do I have customers in Europe? Then I must follow GDPR!

Do my customers have any governance structures? How can we respect them?

Also, there’s no harm in running your PRD by an internal security expert. CISOs and lawyers can help you!
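To illustrate what “auth as a requirement” can mean in practice, here is a minimal Python sketch of deny-by-default, role-based access plus PII masking for a person-level query. The roles, the PII field list, and the function names are hypothetical; a real product would pull permissions from its identity and access management system rather than a hard-coded dictionary.

```python
from enum import Enum


class Action(Enum):
    VIEW = "view"
    EDIT = "edit"
    DELETE = "delete"


# Hypothetical role -> allowed actions map (answers "who can view/edit/delete?").
PERMISSIONS: dict[str, set[Action]] = {
    "analyst": {Action.VIEW},
    "data_steward": {Action.VIEW, Action.EDIT},
    "admin": {Action.VIEW, Action.EDIT, Action.DELETE},
}

# Hypothetical columns treated as PII, and the roles allowed to see them unmasked.
PII_FIELDS = {"email", "phone"}
PII_ROLES = {"data_steward", "admin"}


def is_allowed(role: str, action: Action) -> bool:
    # Unknown roles get an empty permission set: deny by default.
    return action in PERMISSIONS.get(role, set())


def query_person(record: dict, role: str) -> dict:
    """Return a person-level record, masking PII for roles without PII access."""
    if not is_allowed(role, Action.VIEW):
        raise PermissionError(f"role {role!r} may not view person-level data")
    if role in PII_ROLES:
        return dict(record)
    return {key: ("***" if key in PII_FIELDS else value) for key, value in record.items()}


row = {"user_id": 42, "email": "a@example.com", "phone": "555-0100", "country": "DE"}
print(query_person(row, "analyst"))
# {'user_id': 42, 'email': '***', 'phone': '***', 'country': 'DE'}
```

A PRD does not need code like this, but spelling out the equivalent rules (which roles exist, what each can do, and which fields count as PII) gives engineering and security reviewers something concrete to check.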

🔗 Data Links of the Week

This is a must-read on the “Modern Data Stack” if you haven’t heard the term; this blog will get you up to speed. It took me an hour to find, so do read it!

This LinkedIn post by Benjamin Rojan is A+ when it comes to catching up on current affairs in Data Engineering without getting too technical about it!

Meta just launched an open-source LLM, Llama-2 (learn about it here). It’s pitched as an open-source ChatGPT competitor, and Databricks, Snowflake, Azure, and others already support it.

What do you think of the challenges above? Have you faced any? Do you find these links useful? Comment below!

Signing off,

Richa,

Chief Data Obsessor at The Data PM Gazette!