Writing a great Gen AI PRD: PART 3 - BigQuery AI co-pilot

A sample AI copilot feature PRD and how to think about each section well.

Welcome back, Data PMs! I took a break from writing and couldn’t publish the September edition, but I am back in the series with a real-life example.

In the first two posts of this series, we explored the key considerations for developing AI-powered features and the essential metrics to measure their success. In this edition, I am putting all that knowledge into practice by walking through the creation of a Product Requirements Document (PRD) for a game-changing AI feature: the BigQuery AI Copilot. Read along to learn how to do the following:

✅ Come up with the initial set of features, based on the user journey and the workflow improvements the feature enables. (See: North Star Metric & Functional Requirements)

✅ Sequence features: what makes the MVP and what can be released later. (See: Roadmap)

✅ Calculate ROI and justify it to leadership. (See: FAQs)

✅ Set a pricing strategy and monetize the feature. (See: GTM Plan)

Heads up: this is a VERY LONG post, because it contains a whole PRD! Bookmark it to refer to when you write your own. Here’s Phil telling you: you got this!

Cheers,

Richa

Your Chief Data Obsessor, The Data PM Gazette

Case in Product: AI Copilot for BigQuery

We propose developing an AI copilot deeply integrated into Google BigQuery, designed to enhance the productivity and effectiveness of data scientists and analysts. This tool will leverage Gemini's advanced language understanding capabilities to provide contextual assistance, automate routine tasks, and suggest optimizations within the BigQuery interface.

Fun fact: when I started researching this post, there wasn’t a proper AI copilot in BigQuery, just a simple “Generate data insights” feature (reference). Since then, Google has released a full-fledged Gemini for BigQuery feature.

As we dive into this PRD, we'll see how the principles we've discussed come to life in a real-world scenario. We'll cover everything from the product's core functionality to its market potential and go-to-market strategy. Let's get started!

Sample AI PRD: Co-pilot for AI Teams

For the sample PRD, I give my commentary in green boxes (like the one below) and the actual PRD content in the main body. Follow along!

Setting a goal and a vision for what we want this copilot to do helps anchor our teams on why they should be working on this feature.

Note how the Goal is oriented toward “What will happen in the future?” while the Vision states “How will we achieve that future?”

⚽️ Goal

To create an AI-powered assistant that enhances the capabilities of both data analysts and data scientists within BigQuery. The Copilot will transform BigQuery into an intelligent workspace where human expertise and AI capabilities combine to unlock unprecedented value from data.

🔮 Vision

The BigQuery AI Copilot will be a data analysis augmentor, democratizing advanced data science techniques while accelerating time to insight. It will adapt to each user’s needs, proactively identify data patterns, and seamlessly integrate with the Google Cloud ecosystem. As it evolves, the copilot will continuously learn from user interactions, expanding its capabilities while maintaining the highest standards of ethical AI use.

🎯 North Star Metric

Time to Insight: The average time it takes for a data scientist to go from raw data to actionable insights using BigQuery with the AI copilot.

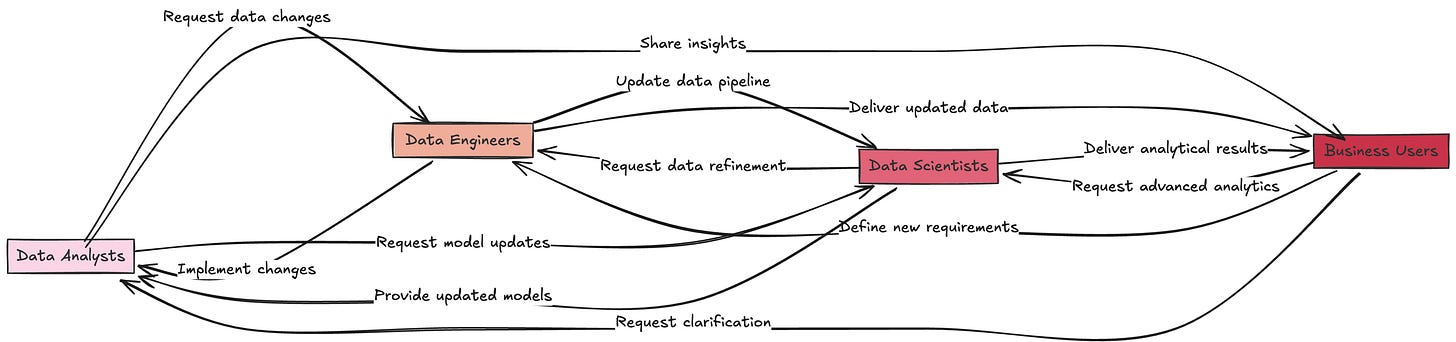

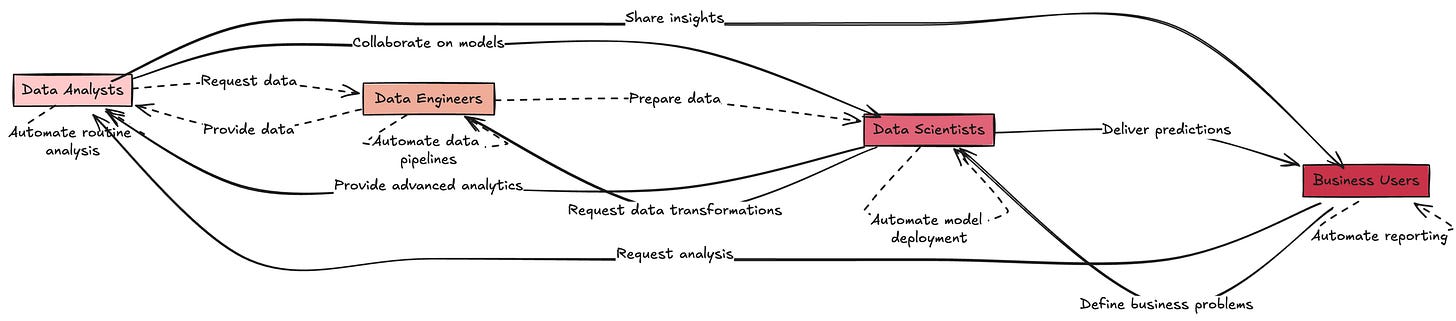

This is the North Star Metric because the copilot can significantly reduce the back-and-forth that typically happens between business users, analysts, data engineers, and data scientists to produce an insightful analysis.

This runs counter to the usual “time spent in product” metric, but it is useful for increasing overall NPS (Net Promoter Score).

This metric encapsulates the core value proposition of our AI copilot - enabling data scientists to derive insights faster and more efficiently. It reflects improvements in query optimization, automated EDA, and smart suggestions, directly tied to user productivity and satisfaction.
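To track Time to Insight, the team first has to define when “raw data” and “actionable insight” happen. A minimal sketch, assuming a hypothetical event log in which each analysis session records a `data_opened` event and an `insight_shared` event (both names are placeholders, not real BigQuery events), computes the average in hours:

```python
from datetime import datetime, timedelta
from statistics import mean


def time_to_insight_hours(events: list[dict]) -> float:
    """Average hours from first 'data_opened' to first 'insight_shared' per session.

    Event names and the dict schema are hypothetical placeholders.
    """
    starts: dict[str, datetime] = {}
    ends: dict[str, datetime] = {}
    for e in events:
        sid, ts = e["session_id"], e["timestamp"]
        if e["event"] == "data_opened":
            starts[sid] = min(ts, starts.get(sid, ts))  # keep the earliest start
        elif e["event"] == "insight_shared":
            ends[sid] = min(ts, ends.get(sid, ts))  # keep the earliest insight
    # Only sessions that actually reached an insight count toward the metric.
    durations = [
        (ends[sid] - starts[sid]) / timedelta(hours=1)
        for sid in starts
        if sid in ends and ends[sid] >= starts[sid]
    ]
    return mean(durations) if durations else float("nan")


t0 = datetime(2024, 10, 1, 9, 0)
events = [
    {"session_id": "a", "event": "data_opened", "timestamp": t0},
    {"session_id": "a", "event": "insight_shared", "timestamp": t0 + timedelta(hours=6)},
    {"session_id": "b", "event": "data_opened", "timestamp": t0},
    {"session_id": "b", "event": "insight_shared", "timestamp": t0 + timedelta(hours=2)},
    {"session_id": "c", "event": "data_opened", "timestamp": t0},  # never reached an insight
]
print(time_to_insight_hours(events))  # 4.0
```

Comparing this average for sessions with and without the copilot is one way to attribute improvements to the feature; sessions that never reach an insight are excluded here and would be worth tracking as a separate drop-off rate.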

User Journey

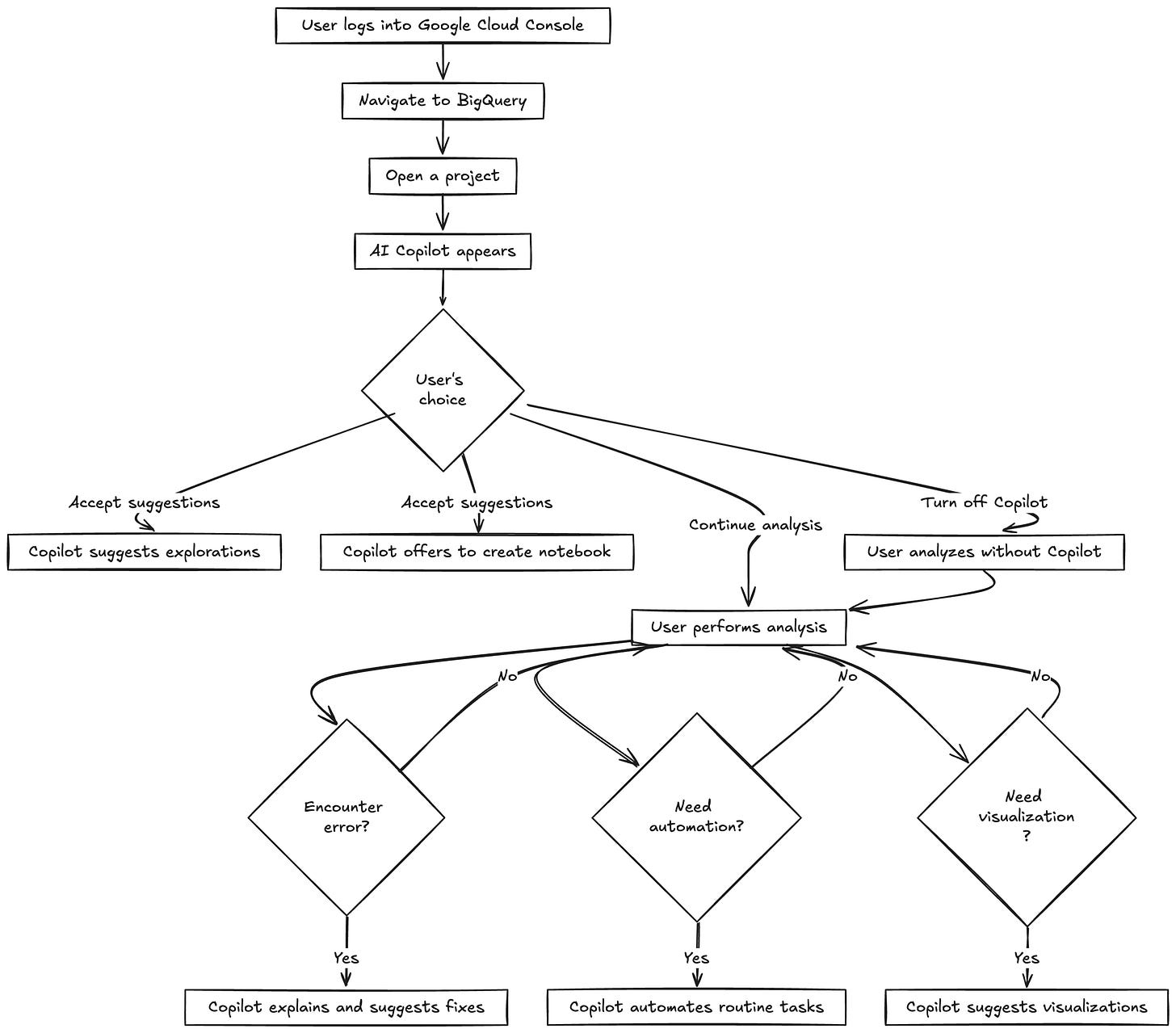

Overall, the user can interact with the copilot across the entire analysis flow. Here are all the steps at which the user can interact with the copilot in a BigQuery project.

🧰 Functional Requirements

The copilot will only be a worthwhile investment if it generates enough value for users that (a) users who used to get stuck writing SQL queries can use BigQuery, and (b) users whose analysis was not very thorough can improve the quality of their work. Users should feel a significant jump in experience; only then can the copilot improve either (a) user retention or (b) Monthly Active Users (MAU) in the long run. This won’t necessarily increase “time spent in product per user”, but it will reduce “time to insight” and therefore increase user satisfaction.

If the AI copilot works as intended, it will short-circuit many parts of the process above (see the dashed lines).

Therefore, some of the features of the copilot are as follows:

Suggested Features

Here are the must-have features to test the investment hypothesis and then make the copilot monetizable. We propose releasing some features as an MVP in the first six months, followed by rapid additions over the next two years.

The “What” and “Why” structure for each feature keeps readers from mistaking a feature for something it’s not, and explains why the feature matters to the user. Also note how the explanation above the diagram states the “goal of the product” and how each feature relates to it, giving a 30,000 ft. picture of the product.

🛣️ Roadmap

Here’s a more detailed phased approach to developing and releasing the BigQuery AI copilot, aligning with the features described in the Functional Requirements section:

As development progresses, we will re-prioritize features and revisit how each modality should work.

In this section, notice how each feature grows incrementally, and how we first target one persona and then add others. This lets dev teams build one part of the feature completely before moving on to related but different use cases.

Even a sub-feature such as “Context-aware Query Suggestions” is broken into three releases, so we can test our hypothesis that the feature drives user productivity and makes BigQuery more intuitive from the very first release.

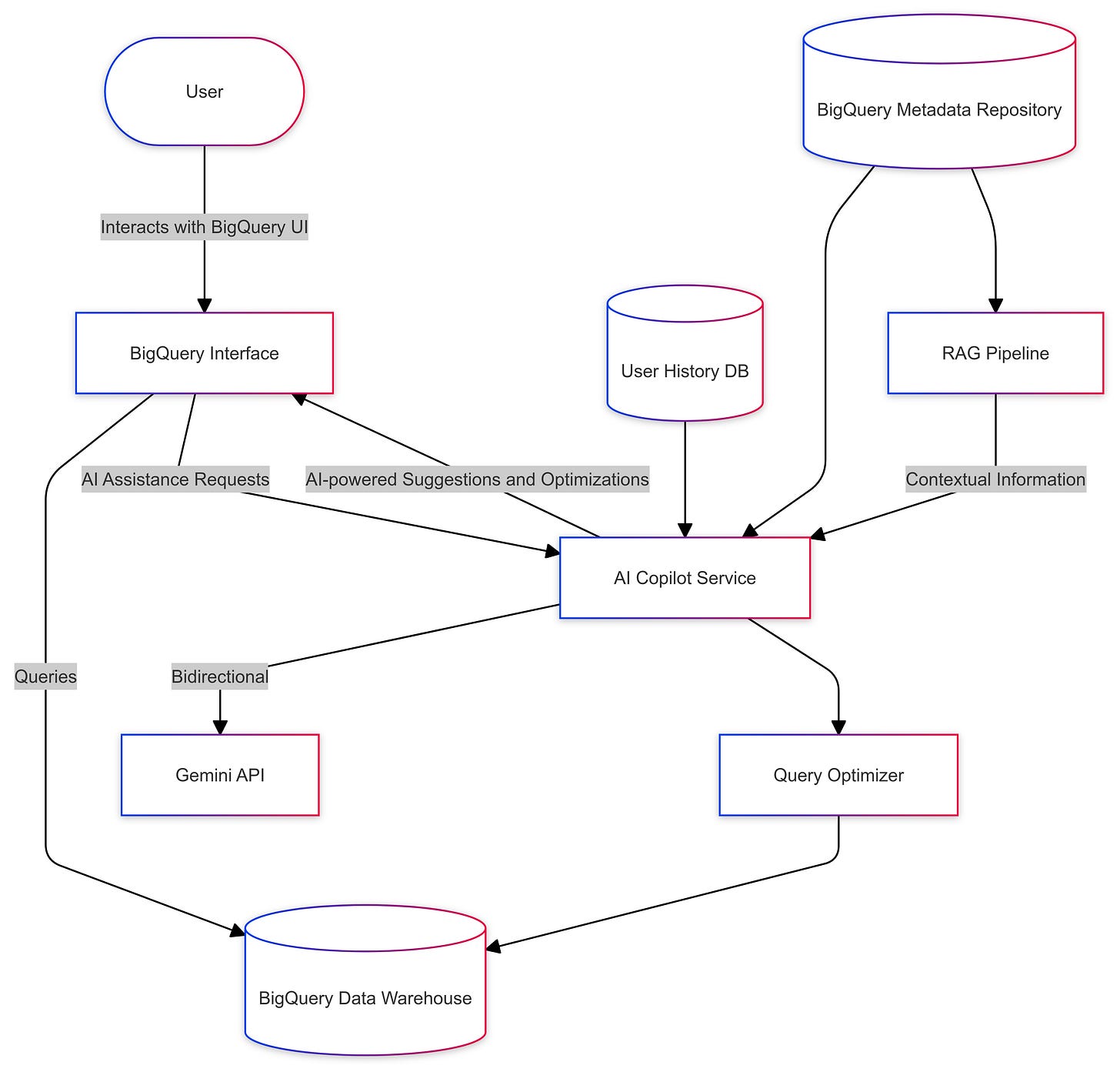

🏗 High-Level Architecture

As discussed with the architects, a high-level solution would involve the following:

BigQuery UI Layer: Existing BigQuery interface with embedded AI copilot features

Copilot Service: A new microservice that handles AI logic and communicates with BigQuery

Gemini API Integration: For natural language processing and generation

BigQuery Metadata Service: Provides context about user's data and query history

Suggestion Cache: Stores and retrieves common suggestions for faster response

Feedback Loop System: Collects user interactions to improve AI suggestions over time

Key Considerations:

Ensure low-latency integration with BigQuery UI for real-time assistance

Implement robust security measures to protect user data and queries

Design for scalability to handle increasing user load and data volumes

This section outlines the high-level technical design developed in consultation with the architects, which helps the engineering team scope the feature and envision how the different services will fit together.
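To make the component list concrete for engineering scoping, here is a minimal Python sketch of one request through the Copilot Service: check the Suggestion Cache, pull schema context from the Metadata Service, call the model, and log feedback. It is an illustration under stated assumptions, not the architects’ design: `fetch_schema` and `generate` are hypothetical stand-ins for the BigQuery Metadata Service and the Gemini API (no real Google client library is used), and the LRU eviction policy is our own choice.

```python
from __future__ import annotations

from collections import OrderedDict
from dataclasses import dataclass, field
from typing import Callable


class SuggestionCache:
    """Small LRU cache keyed by (dataset, request) to skip repeated model calls."""

    def __init__(self, capacity: int = 1024) -> None:
        self.capacity = capacity
        self._items: OrderedDict[tuple[str, str], str] = OrderedDict()

    def get(self, key: tuple[str, str]) -> str | None:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: tuple[str, str], value: str) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


@dataclass
class CopilotService:
    fetch_schema: Callable[[str], str]  # hypothetical stand-in for the Metadata Service
    generate: Callable[[str], str]  # hypothetical stand-in for the Gemini API
    cache: SuggestionCache = field(default_factory=SuggestionCache)
    feedback: list[tuple[str, str, bool]] = field(default_factory=list)
    model_calls: int = 0

    def suggest(self, dataset: str, request: str) -> str:
        key = (dataset, request)
        cached = self.cache.get(key)
        if cached is not None:
            return cached
        # Ground the prompt in the user's schema so suggestions are context-aware.
        prompt = f"Schema:\n{self.fetch_schema(dataset)}\n\nRequest: {request}\nSQL:"
        self.model_calls += 1
        suggestion = self.generate(prompt)
        self.cache.put(key, suggestion)
        return suggestion

    def record_feedback(self, request: str, suggestion: str, accepted: bool) -> None:
        # Feedback Loop System: store accept/reject signals for later tuning.
        self.feedback.append((request, suggestion, accepted))


svc = CopilotService(
    fetch_schema=lambda ds: "orders(order_id INT64, amount NUMERIC, created_at TIMESTAMP)",
    generate=lambda prompt: "SELECT SUM(amount) FROM orders",  # fake model for illustration
)
first = svc.suggest("sales", "total revenue")
second = svc.suggest("sales", "total revenue")  # served from the cache
svc.record_feedback("total revenue", first, accepted=True)
print(svc.model_calls)  # 1
```

Injecting the metadata and model calls as plain functions keeps the service testable without network access, which also makes the low-latency and security considerations easier to measure in isolation.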

👩🏻‍💼 GTM Plan

This section covers how we will take the feature to market: pricing, feature releases, and customer, sales, and marketing enablement plans.

Pricing and Feature Release Structure

Basic Tier (Free) - Available to all BigQuery users

Context-aware query suggestions

Simple query optimization

Simple Natural Language to SQL

Advanced Tier (Paid) - Priced at 10-15% of customer's BigQuery spend

Automated Exploratory Data Analysis (EDA)

Cross-dataset insights

Advanced Natural Language to SQL

Error Handling for Queries
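Because the Advanced Tier is priced as a share of spend, it helps to translate the percentage into dollars for a given customer. A minimal sketch, assuming the fee applies to BigQuery spend over the same billing period (the PRD doesn’t specify annual vs. monthly billing) and using an illustrative customer with $200,000 of spend:

```python
def advanced_tier_price_range(bigquery_spend: float) -> tuple[float, float]:
    """Advanced Tier price as 10% to 15% of a customer's BigQuery spend."""
    return bigquery_spend * 0.10, bigquery_spend * 0.15


low, high = advanced_tier_price_range(200_000)  # illustrative spend, not real data
print(f"${low:,.0f} to ${high:,.0f}")  # $20,000 to $30,000
```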

Feature Accessibility Rollout

Soft Launch (Months 1-2)

The basic tier is turned on by default for internal users and an initial group of 100 users.

Collect feedback and refine

Beta Program (Months 3-4)

Expand the basic tier to 20% of users.

Invite select customers to test the advanced tier.

General Availability (Month 5)

The basic tier is available to all users, by default.

The advanced tier is open for paid subscriptions.

Full Integration (Months 6+)

Gradual release of additional advanced features

Continuous improvement based on usage data
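The rollout phases above map naturally onto a percentage-based feature gate. A minimal sketch, assuming hypothetical names (`bucket`, `basic_tier_enabled`, `pilot_users`) and hash-based bucketing, a common technique for percentage rollouts that the PRD does not itself prescribe:

```python
import hashlib


def bucket(user_id: str) -> int:
    """Stable bucket in [0, 100) so a user stays in or out as the rollout widens."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def basic_tier_enabled(user_id: str, month: int, pilot_users: set[str]) -> bool:
    """Hypothetical basic-tier gate following the soft launch, beta, and GA phases."""
    if user_id in pilot_users:  # internal users and the initial 100 users
        return True
    if month <= 2:  # Soft Launch: pilot group only
        return False
    if month <= 4:  # Beta: roughly 20% of users
        return bucket(user_id) < 20
    return True  # General Availability (month 5+): on by default for everyone


users = [f"user-{i}" for i in range(10_000)]
beta_share = sum(basic_tier_enabled(u, 3, set()) for u in users) / len(users)
print(round(beta_share, 2))  # close to 0.2
```

Hashing the user ID, rather than sampling randomly on each request, keeps the same 20% of users in the beta across sessions, and because the enabled share only grows from phase to phase, nobody loses access as the rollout widens.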

Marketing Channels

Google Cloud's official blog and social media channels

Dedicated email campaigns to BigQuery users

Google Cloud Next and other major tech conferences

Webinars and online workshops

Paid digital advertising (search, display, social)

Content marketing (whitepapers, case studies, tutorials)

Sales Enablement

Comprehensive training for Google Cloud sales teams

Creation of demo environments and scripts

Development of ROI calculator and comparison tools

Resources for data scientists, engineers, and analysts to adopt the feature

Partnerships

Co-marketing with major cloud consulting partners

Educational partnerships with online learning platforms

GTM Success Metrics

Adoption rate: 30% of users engaging with the basic tier within 6 months

Conversion: 30% of adopting users upgrading to the advanced tier within 12 months

Revenue: 3% increase in BigQuery revenue attributed to AI copilot in the first year
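The three GTM targets can be sanity-checked against each other. A back-of-the-envelope sketch, assuming the copilot’s revenue comes only from Advanced Tier fees (10-15% of a paying customer’s BigQuery spend) and ignoring any extra BigQuery usage the copilot drives: 30% adoption times 30% conversion means about 9% of users pay, so a 3% revenue uplift requires those payers to account for 20-30% of total BigQuery spend. That holds if heavier spenders are the ones most likely to upgrade.

```python
ADOPTION_RATE = 0.30  # basic-tier adoption within 6 months
CONVERSION_RATE = 0.30  # adopters upgrading to the advanced tier within 12 months
REVENUE_TARGET = 0.03  # first-year BigQuery revenue uplift attributed to the copilot

paying_share_of_users = ADOPTION_RATE * CONVERSION_RATE


def required_spend_share(fee_rate: float, target: float = REVENUE_TARGET) -> float:
    """Share of total BigQuery spend paying users must represent so that
    fee_rate * (their spend) equals `target` of total BigQuery revenue.
    Assumes fees are the only copilot revenue (a simplifying assumption)."""
    return target / fee_rate


print(f"{paying_share_of_users:.0%} of users pay")  # 9% of users pay
for fee in (0.10, 0.15):
    print(f"fee {fee:.0%}: payers must hold {required_spend_share(fee):.0%} of spend")
```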

Building a feature is not enough; you also need to land it in users’ hands. A solid GTM plan helps leaders visualize that and builds confidence in your product plan.

🙋🏻 FAQs

Q: What is the estimated cost of building the BigQuery AI copilot feature?

A: We estimate $15-20 million over 18 months for development and launch. This figure accounts for the complex integration with Gemini API, extensive engineering resources needed for a phased rollout, and essential marketing efforts. The projected market share increase and long-term revenue growth potential justify the investment. See Appendix 1.

Q: Is pricing the feature the right strategy, and how does it align with our overall product pricing model?

A: Yes, the tiered pricing strategy is optimal. The free Basic Tier will drive adoption and showcase value, while the Advanced Tier (priced at 10-15% of BigQuery spend) ensures revenue scales with usage. This approach aligns with our freemium model and with industry precedents such as GitHub Copilot, balancing accessibility for new users with the monetization of high-value features, and could boost overall BigQuery engagement and revenue.

Interestingly, the actual Gemini for BigQuery feature took a similar approach. See the Gemini for BigQuery pricing here.

Q: How will this feature expand the Serviceable Addressable Market (SAM) and Total Addressable Market (TAM)?

A: The AI copilot is projected to expand our SAM from $4.3 billion to $5.5 billion by 2026, increasing market share from 11% to 14%. While not directly expanding TAM, the feature's ability to simplify complex data tasks may attract new users to cloud data warehousing, indirectly growing the overall market. This growth is rooted in the copilot's potential to democratize data science and accelerate insights for businesses of all sizes. See Appendix 2.

Q: How does the BigQuery AI copilot differentiate from existing SQL assistants or AI-powered query tools?

A: The BigQuery AI copilot stands out through its deep Google Cloud integration and Gemini's advanced AI capabilities. It differentiates from competitors such as:

Amazon Redshift's Query Editor: Our copilot offers more advanced natural language processing and cross-dataset insights.

Microsoft's Azure Data Studio AI: BigQuery's copilot provides deeper integration with the entire analytics workflow, from EDA to visualization suggestions.

Snowflake's Snowsight: Our solution offers more comprehensive query optimization and error-handling capabilities.

The focus on reducing time-to-insight and adaptive learning provides a unique value proposition, addressing core challenges of data analysis efficiency and accessibility across the entire data workflow.

Q: What are the key risks of developing and launching the BigQuery AI copilot?

A: Primary risks include AI accuracy issues, data privacy concerns, user adoption challenges, and competitive responses. Technical integration complexities and meeting "Time to Insight" expectations also pose significant challenges. These risks stem from the innovative nature of the product and the sensitive data it handles. A robust risk mitigation plan, focusing on rigorous testing, strong security measures, and clear user communication, will be crucial for successful implementation.

FAQs help answer obvious open questions and keep a log of questions others might have later. Always include the most obvious ones, and back them up with any calculations you have done in an appendix. Here are some back-pocket calculations for you.

Appendix 1 - Cost of Feature Development (3-Year Projection)

Here's a breakdown of the estimated costs for developing and maintaining the BigQuery AI copilot over three years:

a) Personnel Costs:

5 ML Engineers: $200,000/year each

3 Full-Stack Developers: $180,000/year each

2 UX Designers: $150,000/year each

1 Product Manager: $180,000/year

2 QA Engineers: $140,000/year each

Annual Total: $2,300,000

3-Year Total: $6,900,000

b) Infrastructure and Cloud Resources:

Development and testing environments: $500,000/year

Production infrastructure: $1,500,000/year (scaling up over time)

3-Year Total: $6,000,000

c) AI Model Training and Inference:

Initial model training: $1,000,000 (Year 1)

Ongoing training and inference: $750,000/year (Years 2 and 3)

3-Year Total: $2,500,000

d) Licensing and Third-party Tools:

AI libraries and tools: $200,000/year

Development and collaboration tools: $100,000/year

3-Year Total: $900,000

e) User Research and Testing:

Ongoing user studies: $150,000/year

3-Year Total: $450,000

f) Marketing and Launch:

Initial launch campaign: $500,000 (Year 1)

Ongoing marketing: $250,000/year (Years 2 and 3)

3-Year Total: $1,000,000

g) Contingency (10% of items a-f): 3-Year Total: $1,775,000

Total Estimated Cost (3 Years): $19,525,000
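Keeping the estimate as a small script makes it easy to re-run when headcount or rates change, and it catches arithmetic slips. A minimal sketch that sums the Appendix 1 line items, applying the 10% contingency to the sum of items a through f (the dictionary layout is our own, for illustration):

```python
YEARS = 3

# Appendix 1 personnel line items: role -> (headcount, annual salary in USD).
PERSONNEL = {
    "ML Engineer": (5, 200_000),
    "Full-Stack Developer": (3, 180_000),
    "UX Designer": (2, 150_000),
    "Product Manager": (1, 180_000),
    "QA Engineer": (2, 140_000),
}

annual_personnel = sum(n * salary for n, salary in PERSONNEL.values())

# Remaining three-year totals, built from the per-year figures in Appendix 1.
other_costs = {
    "infrastructure": (500_000 + 1_500_000) * YEARS,
    "model training and inference": 1_000_000 + 750_000 * 2,
    "licensing and tools": (200_000 + 100_000) * YEARS,
    "user research": 150_000 * YEARS,
    "marketing and launch": 500_000 + 250_000 * 2,
}

subtotal = annual_personnel * YEARS + sum(other_costs.values())
contingency = round(subtotal * 0.10)  # 10% buffer on items a-f
total = subtotal + contingency

print(f"Personnel per year: ${annual_personnel:,}")  # Personnel per year: $2,300,000
print(f"Subtotal: ${subtotal:,}  Contingency: ${contingency:,}  Total: ${total:,}")
# Subtotal: $17,750,000  Contingency: $1,775,000  Total: $19,525,000
```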

Key Considerations:

Costs are expected to be front-loaded, with higher expenses in the first year for initial development and launch.

We anticipate economies of scale and efficiency gains in years 2 and 3, potentially offsetting increased usage costs.

This estimate assumes internal development. Costs could vary if we leverage existing Google AI resources or need to scale more aggressively.

Return on Investment (ROI) Projection:

Based on our market growth estimates (increasing market share from 11% to 13-14%), we project additional revenue of $800 million to $1.2 billion by year 3.

This represents a potential ROI of 40x to 60x the development cost over three years.
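The revenue range and ROI multiple can be reproduced from the market figures. A minimal sketch, assuming “additional revenue” means the incremental annual revenue from a higher share of the projected $39.1 billion 2026 market (from Appendix 2), and using roughly $19.5 million as the three-year cost:

```python
MARKET_2026 = 39.1e9  # projected cloud data warehouse market (Appendix 2)
BASE_SHARE = 0.11  # BigQuery's current market share
THREE_YEAR_COST = 19.5e6  # approximate Appendix 1 total (assumption: rounded)


def incremental_revenue(new_share: float) -> float:
    """Extra annual revenue from moving BigQuery from BASE_SHARE to new_share."""
    return MARKET_2026 * (new_share - BASE_SHARE)


def roi_multiple(revenue: float, cost: float) -> float:
    """Revenue-to-cost multiple, the way the projection above expresses ROI."""
    return revenue / cost


for share in (0.13, 0.14):
    rev = incremental_revenue(share)
    print(f"{share:.0%}: +${rev / 1e9:.2f}B, {roi_multiple(rev, THREE_YEAR_COST):.0f}x")
# 13%: +$0.78B, 40x
# 14%: +$1.17B, 60x
```

The multiple here is revenue divided by cost; a net ROI, (revenue - cost) / cost, would be one point lower, which does not change the 40x to 60x picture.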

Appendix 2: Market Rationale

The global cloud data warehouse market is projected to reach $39.1 billion by 2026, growing at a CAGR of 19.6%. With the AI copilot, we aim to increase BigQuery's market share from 11% to 20% by 2026, potentially generating $7.8 billion in revenue.
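The revenue figures in this appendix and in the SAM answer in the FAQs come from multiplying the projected market by a market share. A quick check of that arithmetic, using only the shares stated in this document:

```python
MARKET_2026 = 39.1e9  # projected cloud data warehouse market in 2026


def revenue_at_share(share: float) -> float:
    """BigQuery revenue in billions of USD at a given share of the 2026 market."""
    return MARKET_2026 * share / 1e9


for label, share in [("current", 0.11), ("FAQ target", 0.14), ("Appendix 2 aim", 0.20)]:
    print(f"{label}: {share:.0%} share -> ${revenue_at_share(share):.1f}B")
# current: 11% share -> $4.3B
# FAQ target: 14% share -> $5.5B
# Appendix 2 aim: 20% share -> $7.8B
```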

This feature will create significant synergies across Google Cloud:

Looker: 40% increased adoption among BigQuery users

Vertex AI: 35% usage increase for advanced ML workflows

Google Workspace: 15% growth in enterprise adoptions

Competitively, the AI copilot will:

Challenge Databricks in data science and ML workflows

Enhance BigQuery's ease of use to compete with Snowflake

Outpace Amazon Redshift's growth by leveraging superior AI capabilities

Strategically, this initiative:

Positions Google Cloud as a leader in AI-powered data analytics

Strengthens the overall Google Cloud ecosystem

Attracts data scientists and analysts, influencing broader cloud decisions

Serves as a foundation for future AI-driven innovation
