Writing a great PRD for a Gen AI feature: Part 1

As most PMs are being asked to write PRDs for new AI features, how do you make sure that you are writing a great PRD? We discuss the same in this series.

Recently, I came across ChatPRD by

and it’s a great tool for writing PRDs using AI! But I wondered whether a well-rounded PRD template exists for AI features. How should I define the right requirements so that Engineering can get started on the feature quickly? Well, technically, Product School has a template, but to use something like it, you have to understand the landscape of Gen AI features: what the different scenarios for using Gen AI are, and how best to define them, depending on how deep PRDs go at your company.

As more and more companies work on incorporating LLMs into their feature development, PMs are asked to determine how to use LLMs in the tech stack and build industry-relevant features. So, over this post and the next, I will go over how to define the requirements of an AI (LLM-based) feature. Excited? Let’s get started.

When to use Gen AI?

While the internet might want you to believe that Gen AI will solve all of the world’s problems, it will not, especially if you are working in software. Not every use case requires AI, and even when AI can support a use case, you should not always force that solution.

Here are the different use cases in which you can think of building a Gen AI feature that can be production grade i.e. launched to all your users with confidence:

These can be further summarized into 3-4 categories of use cases:

It’s important to understand which category your use case falls into and whether Gen AI fits as a solution. Suppose you are tasked with building a chatbot. You might think that Gen AI can solve that use case, but maybe the chatbot your application needs is quite simple, and all you need is 3-4 questions that collect data. For such a use case, building a chatbot using Gen AI is overkill, and might not be the right solution to the user problem you are solving.
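To make the "simple chatbot" case concrete, here is a minimal sketch of a scripted intake flow with no LLM involved. The question list, field names, and the `run_scripted_chat` function are hypothetical and only illustrate the idea; your own product would define different ones.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical intake questions; a real product would define its own.
QUESTIONS: List[Tuple[str, str]] = [
    ("name", "What is your name?"),
    ("email", "What is your email address?"),
    ("issue", "Briefly, what do you need help with?"),
]


def run_scripted_chat(ask: Callable[[str], str]) -> Dict[str, str]:
    """Ask each question in order and collect the answers by field name."""
    answers: Dict[str, str] = {}
    for field, prompt in QUESTIONS:
        answers[field] = ask(prompt).strip()
    return answers


# Canned replies stand in for a real user typing into the chat UI.
canned_replies = iter(["Ada", "ada@example.com", "I cannot reset my password"])
collected = run_scripted_chat(lambda prompt: next(canned_replies))
print(collected)
```

If a flow like this covers the user problem, the requirement can probably be met without any Gen AI investment at all.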

So, it’s important to note the following in the context of Gen AI use cases:

(a) do you have a use case that fits one of the above categories, i.e. is it even possible to productionalize it?

(b) do you really need a Gen AI solution? Could you use a simpler algorithm? Do you have the bandwidth to invest in LLM-based use cases?

Another way to look at the Gen AI use cases is by using the below diagram from

that describes the LLM landscape. Read it from left to right: foundational LLMs (Zone 1) were built to perform certain activities (Zone 2), which are currently being taken to market using today’s Gen AI technologies (Zones 3 and 4), with the support of Zone 5 artifacts, to solve Zone 6 use cases.

Once you understand where your use cases sit, the next thing to understand is how Engineering is going to build the solution, i.e. how do companies put LLMs in production?

Production implementations of LLMs

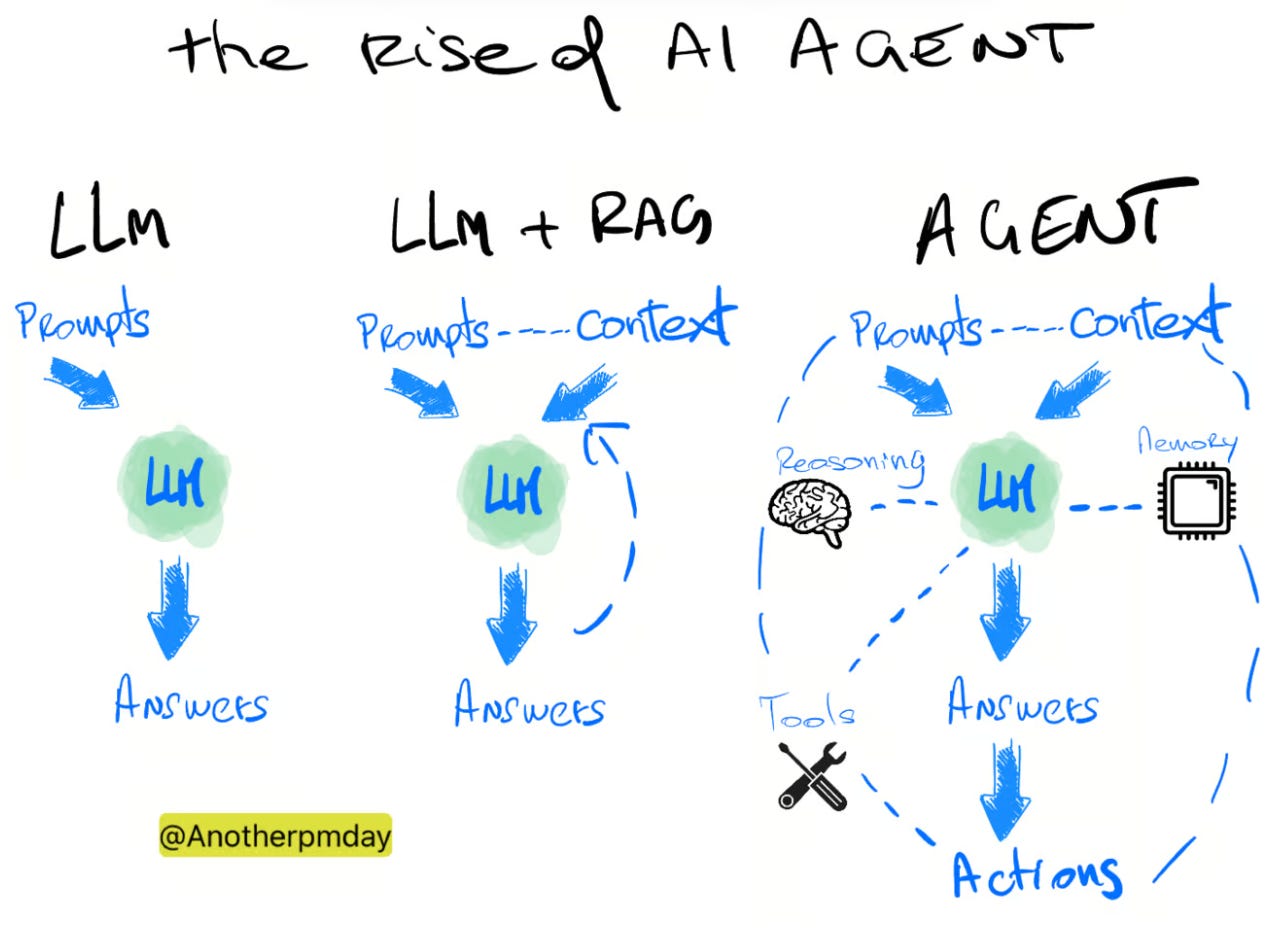

At a very high level, there are four types of LLM solutions in production these days: prompt engineering, RAG (Retrieval-Augmented Generation), a fine-tuned model, and a fully trained model. Each step up increases the complexity and the cost of building a solution, with higher-quality output as the reward.
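As a rough illustration of the first two approaches, the sketch below builds a plain prompt and a RAG-style prompt for the same question. Everything here is an assumption made for the example: the `DOCUMENTS` list, the function names, and the word-overlap retrieval, which stands in for the vector search a real RAG pipeline would typically use. No model is actually called.

```python
import re
from typing import List

# Hypothetical knowledge base; in practice this would be your product's documents.
DOCUMENTS: List[str] = [
    "Refunds are processed within 5 business days.",
    "Premium plans include priority support.",
    "Passwords can be reset from the account settings page.",
]


def words(text: str) -> set:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_prompt(question: str) -> str:
    """Prompt engineering only: instructions plus the question."""
    return f"You are a helpful support assistant.\nQuestion: {question}\nAnswer:"


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Toy retrieval: rank documents by how many words they share with the question."""
    ranked = sorted(documents, key=lambda d: len(words(question) & words(d)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(question: str, documents: List[str]) -> str:
    """RAG: retrieve relevant text first, then add it to the prompt as context."""
    context = "\n".join(retrieve(question, documents))
    return (
        "You are a helpful support assistant. Answer using only the context.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


print(build_prompt("How are refunds processed?"))
print(build_rag_prompt("How are refunds processed?", DOCUMENTS))
```

The difference a PM should notice is that the RAG version depends on a data source and a retrieval step, which is extra work for Engineering to build and maintain.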

While I could explain the four approaches in detail right now, that’s a different blog post, which Amit Paka (Founder of Fiddler AI) has written here.

Beyond these four approaches, Gen AI agents have emerged as a new way to productionalize LLMs. An agent is a repetitive, task-driven workflow that uses the power of LLMs to automate tasks that include decision-making and actions beyond just retrieving information. AI agents are all the rage right now! See the Further reading section for more.

Another way of looking at this evolution would be from the perspective of how to best give prompts to LLMs and how complex that can become as the solutions to the industry use cases mature. Ignoring the companies mentioned on this graph, here’s how the complexity rises for prompts with different use cases:

Why should a PM who is writing a Gen AI PRD care?

The reason I spent two-thirds of this post talking through the types of use cases and how LLMs are used in production today is that these choices:

(a) influence the timeline of your feature,

(b) influence the accuracy of your solution, and

(c) influence the cost of building/using that feature too.

A PM getting the requirements right for their team should keep at least a few important factors in mind:

Accuracy. You don’t always need to aim for a trained model. Some tasks can be done with just the right prompt engineering and context. Your goal should be to define and achieve a certain accuracy for your solution. Defining that will help the team pick the appropriate solution.

Human in the loop? You don’t always have to build an “autonomous agent” if you want to automate workflows, because automation will not always be the best path. Automating everything might create hallucinated outputs, and if accuracy is your prime concern, you might want to build a workflow that involves a human in the loop.

Cost: Tools & Human Resources. Another important parameter to consider is the cost of the solution. What level of complexity are you aiming for? Do you need a specialized data source or not? Who can trigger the use of LLMs, and how often? Are you using the latest foundational model, or is an older model good enough? Or would fine-tuning an open-source base model be good enough? Does your company have the bandwidth to invest in the underlying platform work that’s required to support the Gen AI feature? You might need a new type of data store, some new tooling for the Engineering team, etc. All of this will cause other teams to

Data security is another important factor to be considered. You might have a very basic task at hand: let’s say you want to create a chatbot on the data that your customers have for them to query. But, you might be storing sensitive data, and exposing that data to an LLM might breach your contract with your customer.

Market timing matters too. In the long run, you might want to build a feature that requires a fine-tuned model. But in the short run, you might want to launch a simple RAG pipeline, just because you want your product’s presence to be felt in the market.

So, in essence, you need to ensure that you are hedging your solution across these axes and defining the requirements for the right solution for your use case, industry, and company.
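Two of the factors above, the accuracy target and the human in the loop, can be written down quite concretely. Here is a minimal sketch, assuming you have a small set of labeled examples and that Engineering can attach some confidence score to each output. The `Draft` class, the `stub_predict` function, and the threshold values are all hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


def accuracy(predict: Callable[[str], str], examples: List[Tuple[str, str]]) -> float:
    """Fraction of labeled examples where the output matches the expected answer."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(
        1
        for question, expected in examples
        if predict(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(examples)


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical score in [0, 1]; how it is produced is up to Engineering


def route(draft: Draft, threshold: float = 0.8) -> str:
    """Send confident drafts automatically; queue the rest for a human reviewer."""
    return "auto_send" if draft.confidence >= threshold else "human_review"


def stub_predict(question: str) -> str:
    """Stand-in for the real feature; returns canned answers for two questions."""
    canned = {"what plan am i on?": "Premium", "is my invoice paid?": "Yes"}
    return canned.get(question.lower(), "unknown")


EXAMPLES = [
    ("What plan am I on?", "premium"),
    ("Is my invoice paid?", "yes"),
    ("When does my trial end?", "Friday"),
    ("Who is my account owner?", "Ada"),
]
TARGET_ACCURACY = 0.9  # hypothetical target a PRD might state

score = accuracy(stub_predict, EXAMPLES)
print(f"accuracy={score:.2f}, meets target: {score >= TARGET_ACCURACY}")
print(route(Draft("Your refund is on its way.", confidence=0.65)))
```

Stating the target and the review threshold as numbers in the PRD gives Engineering something to test against when choosing between the approaches.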

In the next Substack post, we will discuss how you, as a PM, can do the pre-work, plan the right requirements, and write a well-defined PRD that helps build the right AI feature for your company.

Further reading

A16Z’s note on emerging architectures of LLM applications.

Mathew Harris’ engineering perspective on building an LLM solution and key considerations to keep in mind.

Activant Capital’s Technical Guide for AI Agents ecosystem.