Data Engineering for PMs: A Must-Do Crash Course PART 2

In Part 1 of the Data Engineering for PMs series, we discussed the history of Data Engineering. In this part, we are looking at some important concepts that you must know, and why you should care as a Data PM.

WARNING: this is a long substack, rightfully so. Want to get fluent with Data Engineers? Must read till the end. Skimming is injurious to credibility. :P

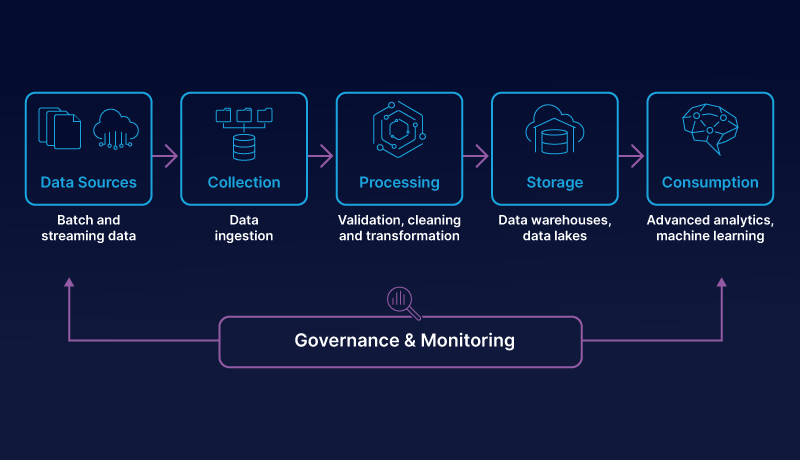

A typical Data Engineering workflow looks like the one below — connecting to data sources, ingesting data, processing data, storing data, and getting it ready for consumption while monitoring and governing it.

Now, let’s explore what each of those terms means, what kind of tools exist for it, and why you should care as a Data PM while defining data requirements.

1. Data Pipeline

A data pipeline orchestrates the flow of data from various sources to destinations, streamlining data ingestion and processing with the goal of enabling timely insights and data delivery. A data pipeline represents the end-to-end process. Here’s an example:

You can have many data pipelines running in an org that orchestrate different data workflows, and it only gets more complex as data sources grow and more data destinations are needed.
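To make the idea concrete, here is a minimal sketch of a pipeline in plain Python. Everything in it is hypothetical and only there for illustration: the function names (`extract_orders`, `transform`, `load`, `run_pipeline`), the sample records, and the `warehouse` list standing in for a destination table. A real pipeline would use proper connectors and an orchestrator, but the source-to-destination shape is the same.

```python
from typing import Dict, List

# Hypothetical source: in a real pipeline this would be an API, database, or file.
def extract_orders() -> List[Dict]:
    return [
        {"order_id": 1, "amount_usd": "19.99", "country": "us"},
        {"order_id": 2, "amount_usd": "5.00", "country": "in"},
    ]

def transform(rows: List[Dict]) -> List[Dict]:
    # Clean types and standardize values so downstream users get consistent data.
    return [
        {
            "order_id": row["order_id"],
            "amount_usd": float(row["amount_usd"]),
            "country": row["country"].upper(),
        }
        for row in rows
    ]

def load(rows: List[Dict], destination: List[Dict]) -> int:
    # Hypothetical destination: a list standing in for a warehouse table.
    destination.extend(rows)
    return len(rows)

def run_pipeline(destination: List[Dict]) -> int:
    # The pipeline is the end-to-end chain: extract -> transform -> load.
    return load(transform(extract_orders()), destination)

warehouse: List[Dict] = []
rows_loaded = run_pipeline(warehouse)
print(rows_loaded, warehouse)
```

Each new source or destination adds another chain like this one, which is where the complexity comes from.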

Why care as a PM?

1. Walking through the existing data pipelines with your data engineer can either save you engineering cycles (if a pipeline you need already exists) or show you exactly how complex the work is.

2. Understanding how your data pipelines are set up can also help in prioritizing your product solutions. If you are using engines such as Apache Spark, it is easier to spin up an ML workflow.

A good exercise would be to create a map like the above diagram that lists all the sources, where the data is stored, how it moves into the final destination, and how it can be used for application/analytical work.

2. ETL (Extract, Transform, Load):

ETL (or its variant ELT, where data is loaded first and transformed inside the destination) refers to the data extraction, transformation, and loading processes that ensure data consistency and reliability as part of a data pipeline architecture. Choosing the right ETL tool makes it easier to set up data pipelines across multiple sources and destinations.

An important aspect of ETL processes is knowing whether you are working with batch processing or real-time processing, i.e. whether data flows in “in batches” or “in real time.” Apache Kafka is one of the most common ways of getting data in real time.
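The difference is easier to see side by side. The sketch below uses plain Python and no Kafka at all; the `EVENTS` list and the `process_batch` / `process_stream` functions are hypothetical names made up for this illustration. The batch version waits for the full set of events and computes totals once, while the streaming version emits an updated result after every single event.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical click events; in production these might arrive via a message broker.
EVENTS: List[Dict] = [
    {"user": "a", "clicks": 3},
    {"user": "b", "clicks": 1},
    {"user": "a", "clicks": 2},
]

def process_batch(events: List[Dict]) -> Dict[str, int]:
    # Batch: wait until the whole set is available, then compute totals once.
    totals: Dict[str, int] = {}
    for event in events:
        totals[event["user"]] = totals.get(event["user"], 0) + event["clicks"]
    return totals

def process_stream(events: Iterable[Dict]) -> Iterator[Dict[str, int]]:
    # Streaming: update the running totals and emit a result after every event.
    totals: Dict[str, int] = {}
    for event in events:
        totals[event["user"]] = totals.get(event["user"], 0) + event["clicks"]
        yield dict(totals)  # a snapshot is available immediately

batch_result = process_batch(EVENTS)
stream_results = list(process_stream(iter(EVENTS)))
print(batch_result)
print(stream_results)
```

Both end up with the same final totals; what changes is how soon a consumer can see them, and that is usually the requirement a PM needs to pin down.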

Further, there is Reverse ETL, which means pushing data from analytical warehouses back into applications such as Salesforce, Gainsight, etc.

ETL tools you will hear about are Apache Airflow, Fivetran, dbt, Apache Spark, Flink, Rudderstack, Airbyte, etc., which facilitate data movement in and out of the data stack.

Why care as a PM?

1. ETL is an important part of a data pipeline infrastructure because it defines what data will come in and how you can transform that data for various use cases.

2. Whether or not your team does any transformations can change the requirements for your data product’s capabilities or the pre-processing work for your machine learning data.

A good way of understanding ETL is to sketch the tables as they look before (at the source), glance over the Python script or design doc for the transformations, and then look at the tables/data after (at the destination).
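Here is what that before/after exercise could look like in a tiny script. It is a sketch under assumptions: an in-memory SQLite database stands in for both source and destination, and the `raw_signups` and `signups_clean` tables, their columns, and the cleaning rules are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Source table (hypothetical): raw signups as an application might store them.
conn.execute("CREATE TABLE raw_signups (email TEXT, signup_date TEXT, plan TEXT)")
conn.executemany(
    "INSERT INTO raw_signups VALUES (?, ?, ?)",
    [
        (" Ana@Example.com ", "2023-01-05", "pro"),
        ("bob@example.com", "2023-01-05", "FREE"),
        ("bob@example.com", "2023-01-05", "FREE"),  # duplicate row
    ],
)

# Extract: read rows from the source.
source_rows = conn.execute(
    "SELECT email, signup_date, plan FROM raw_signups"
).fetchall()

# Transform: trim and lowercase emails, lowercase plans, drop exact duplicates.
cleaned = sorted({(e.strip().lower(), d, p.lower()) for e, d, p in source_rows})

# Load: write the cleaned rows into the destination table.
conn.execute("CREATE TABLE signups_clean (email TEXT, signup_date TEXT, plan TEXT)")
conn.executemany("INSERT INTO signups_clean VALUES (?, ?, ?)", cleaned)

destination_rows = conn.execute(
    "SELECT email, signup_date, plan FROM signups_clean ORDER BY email"
).fetchall()
print("before:", source_rows)
print("after: ", destination_rows)
```

Comparing the `before` and `after` output tells you which rules the transformation applies, and those rules are exactly what you would want written down in your requirements.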

3. Databases / Data Warehouse / Data Lake:

All of these terms refer to some sort of data storage technique that helps store data for different use cases. Databases are more “application use case friendly” storage solutions that help with fast queries for application needs. Databases can be structured (PostgreSQL) or semi-structured (MongoDB). Some newer ones are DuckDB (an in-process analytical database), Pinecone (a vector database), etc.

Data Lakes are the perfect dumping grounds for all types of data (structured, semi-structured, or unstructured) and are mostly friendly for data science workflows, where you might need an image dump next to a structured table to create a model. E.g. Databricks, Amazon and Azure data lakes, etc.

Data warehouses cater to analytical use cases that involve structured and semi-structured data otherwise stored across multiple databases, e.g. doing exploratory data analysis (EDA) across all departments. E.g. Snowflake, Amazon Redshift, Google BigQuery, etc.
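One way to feel the difference is to look at the shape of the queries each system is built for. The sketch below uses a single in-memory SQLite table for both, so it says nothing about performance; the `orders` table and its columns are hypothetical. It only contrasts an application-style lookup with an analytical-style aggregation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, customer TEXT, department TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, "ana", "sales", 120.0),
        (2, "bob", "support", 30.0),
        (3, "ana", "sales", 80.0),
    ],
)

# Application-style query: fetch one record by key, the typical database workload.
one_order = conn.execute(
    "SELECT customer, amount FROM orders WHERE order_id = ?", (2,)
).fetchone()

# Analytical-style query: scan and aggregate many rows, the typical warehouse workload.
by_department = conn.execute(
    "SELECT department, SUM(amount) FROM orders "
    "GROUP BY department ORDER BY department"
).fetchall()

print(one_order)
print(by_department)
```

Databases are tuned for the first kind of query, warehouses for the second, and lakes for holding the raw files that neither handles well.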

Data Lakes and Data Warehouses only become a need once an org starts to spin up multiple databases for different use cases. Here’s a quick comparison:

Why care as a PM?

1. The tools you use for storing data change what you can do with your data. For example, an organization that has implemented a data lake across all its data can spin up new ML data products faster.

As a PM, check how the teams are storing data and what kind of data access the lake/warehouse offers (if one is being used), and prioritize solutions accordingly.

4. Data Modelling

Data Modelling is how data is organized in an organization, at the conceptual, logical, and physical levels, so that it can fulfil various use cases. Typically, data models are represented using a diagram like the one below:

Modelling the data correctly can help reduce data errors and improve consistency as well as database/data warehouse performance. Some notable models are Star Schema, Snowflake Schema (represented above), Data Vault Schema, and One Big Table.
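As an illustration of one of these models, here is a minimal Star Schema sketch in an in-memory SQLite database: one fact table in the middle, pointing at two dimension tables. The table names (`fact_sales`, `dim_product`, `dim_date`), columns, and values are hypothetical and chosen only to show the shape.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Dimension tables describe the "what" and "when" (hypothetical names and columns).
conn.execute("CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT)")
conn.execute("CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, month TEXT)")

# The fact table stores measurable events and points at the dimensions.
conn.execute(
    """CREATE TABLE fact_sales (
        sale_id INTEGER PRIMARY KEY,
        product_id INTEGER REFERENCES dim_product(product_id),
        date_id INTEGER REFERENCES dim_date(date_id),
        revenue REAL
    )"""
)

conn.executemany("INSERT INTO dim_product VALUES (?, ?)", [(1, "books"), (2, "games")])
conn.executemany("INSERT INTO dim_date VALUES (?, ?)", [(10, "2023-01"), (11, "2023-02")])
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
    [(100, 1, 10, 20.0), (101, 2, 10, 50.0), (102, 1, 11, 15.0)],
)

# A typical analytical question: revenue by category and month.
revenue_by_category_month = conn.execute(
    """SELECT p.category, d.month, SUM(f.revenue)
       FROM fact_sales f
       JOIN dim_product p ON p.product_id = f.product_id
       JOIN dim_date d ON d.date_id = f.date_id
       GROUP BY p.category, d.month
       ORDER BY p.category, d.month"""
).fetchall()
print(revenue_by_category_month)
```

A Snowflake Schema would split the dimensions further (say, a separate category table), and One Big Table would flatten all of this into a single wide table.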

Why care as a PM?

The type of Data Model helps you understand whether you can build analytical products on the data as it is, or whether you will need to transform it into another model to get scalable, performant queries.

5. Data Governance & Data Security

Data governance is the practice of organizing and implementing policies, procedures, and standards that help in making data accessible, secure, and usable across the entire lifecycle of data. Data Security refers to the act of protecting information from theft, corruption, and unauthorized access, as well as adhering to the laws and regulations that dictate data security best practices. To achieve data governance and security, companies use metadata-based policies.

Metadata refers to “data about data”: it explains different facets of data assets, such as who owns an asset, when it was last modified, what a “column” means, or whether it contains Personally Identifiable Information (PII).

Once data systems grow, it becomes difficult to keep track of how all systems work with each other, get access to data, or understand what a column means without the right tool for it, often called a “Data Catalog”. A data catalog stitches together metadata to create a glossary of all data assets, monitor access, help define policies, and show data lineage or workflows across various data tools.
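To show what “metadata” and a “catalog” could look like in their simplest form, here is a toy sketch in plain Python. It is not how any of the real catalog tools work internally; the `ColumnMetadata` and `DatasetMetadata` classes, the `catalog` dictionary, the `pii_columns` helper, and all the values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ColumnMetadata:
    name: str
    description: str
    is_pii: bool = False

@dataclass
class DatasetMetadata:
    name: str
    owner: str
    last_modified: str  # ISO date string, kept simple for the sketch
    columns: List[ColumnMetadata] = field(default_factory=list)

# A tiny in-memory "catalog": dataset name -> metadata record (all values hypothetical).
catalog: Dict[str, DatasetMetadata] = {
    "customers": DatasetMetadata(
        name="customers",
        owner="crm-team",
        last_modified="2023-06-01",
        columns=[
            ColumnMetadata("customer_id", "Internal customer key"),
            ColumnMetadata("email", "Primary contact email", is_pii=True),
        ],
    ),
    "orders": DatasetMetadata(
        name="orders",
        owner="sales-eng",
        last_modified="2023-06-03",
        columns=[ColumnMetadata("order_id", "Order key")],
    ),
}

def pii_columns(catalog: Dict[str, DatasetMetadata]) -> List[str]:
    # A metadata-based policy check: list every column flagged as PII.
    return [
        f"{dataset.name}.{column.name}"
        for dataset in catalog.values()
        for column in dataset.columns
        if column.is_pii
    ]

print(pii_columns(catalog))
```

The point of the sketch: once ownership, descriptions, and PII flags are recorded as metadata, questions like “which columns need restricted access?” become a lookup instead of a meeting.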

Tools you will hear about are DataHub, Amundsen, Collibra, Alation, and Atlan!

Why care as a PM?

Data Catalogs help a PM understand what data exists and who has access to what. They help you understand your data and decide what you can do with it.

Now, you might not always have a data catalog implemented. In that case, ask for the repos/internal tools that host all data assets, or the documentation around them.

6. Data Quality & Observability

Data Quality refers to a set of conditions that describe how good data is. As a Data PM, you can judge the quality of a dataset you are using, or of a capability you are building, by how well it adheres to the six dimensions mentioned below:

The higher the quality i.e. the more accurate, complete, consistent, timely (recent), unique (easily identifiable), and valid a dataset, the better it is to make business decisions.

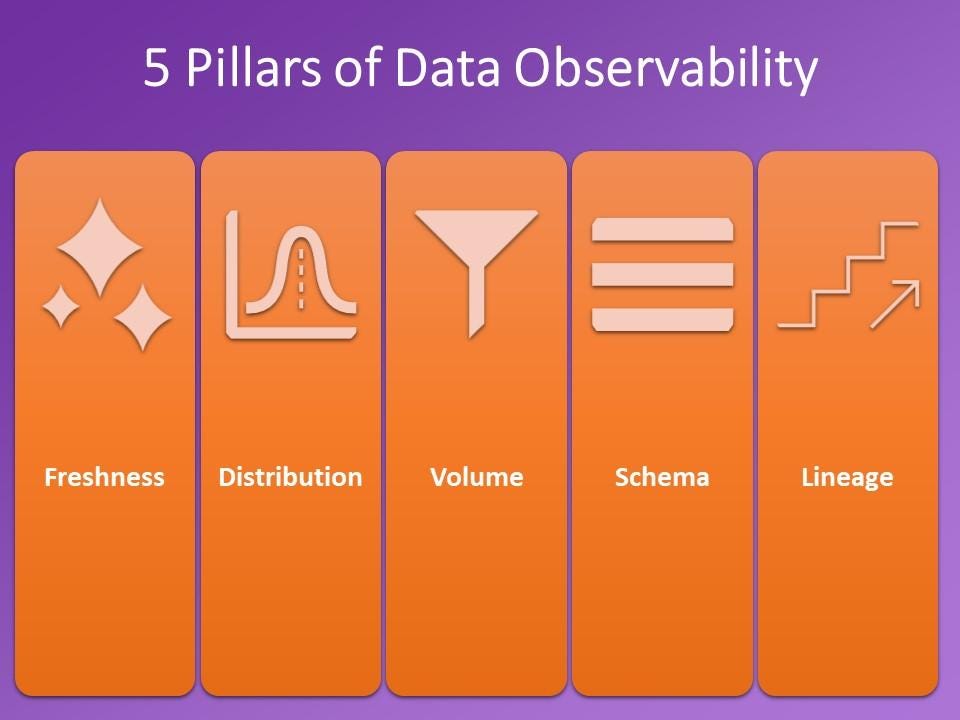
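Some of these dimensions can be measured with very little code. The sketch below scores four of them (completeness, uniqueness, validity, and timeliness) on a handful of hypothetical records. The sample `rows`, the function names, the deliberately simple “contains an @” email rule, and the 30-day freshness window are all assumptions for illustration. Accuracy and consistency are left out because they need a trusted reference or a second system to compare against.

```python
from datetime import date
from typing import Dict, List, Optional

# Hypothetical records; None marks a missing value.
rows: List[Dict[str, Optional[str]]] = [
    {"id": "1", "email": "ana@example.com", "updated": "2023-06-01"},
    {"id": "2", "email": None, "updated": "2023-06-02"},
    {"id": "2", "email": "not-an-email", "updated": "2023-01-15"},
    {"id": "4", "email": "dev@example.com", "updated": "2023-06-03"},
]

def completeness(rows, column: str) -> float:
    # Share of rows where the column is present.
    return sum(r[column] is not None for r in rows) / len(rows)

def uniqueness(rows, column: str) -> float:
    # Share of distinct values among all rows.
    return len({r[column] for r in rows}) / len(rows)

def validity(rows, column: str) -> float:
    # Share of non-missing values passing a deliberately simple format rule.
    values = [r[column] for r in rows if r[column] is not None]
    return sum("@" in v for v in values) / len(values)

def timeliness(rows, column: str, as_of: date, max_age_days: int) -> float:
    # Share of rows updated within the allowed window.
    fresh = sum(
        (as_of - date.fromisoformat(r[column])).days <= max_age_days for r in rows
    )
    return fresh / len(rows)

report = {
    "completeness(email)": completeness(rows, "email"),
    "uniqueness(id)": uniqueness(rows, "id"),
    "validity(email)": validity(rows, "email"),
    "timeliness(updated)": timeliness(rows, "updated", date(2023, 6, 4), 30),
}
print(report)
```

Scores like these turn “the data looks off” into numbers you can put thresholds on in a requirements doc.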

Data Observability, on the other hand, is about building a system that implements “Data Quality” best practices. The goal of data observability is to use tools and practices to identify, troubleshoot, and tackle issues that affect data quality and data pipelines.
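In its smallest form, that “system” is a set of checks that run on every pipeline load and flag what failed. The sketch below is a toy version under assumptions: the `run_stats` numbers, the check names, and the thresholds are hypothetical, and a real setup would send the failures to an alerting channel instead of printing them.

```python
from typing import Callable, Dict, List

# Hypothetical statistics that a monitor might collect after a pipeline run.
run_stats: Dict[str, float] = {
    "row_count": 120.0,
    "null_rate_email": 0.30,
    "hours_since_last_load": 2.0,
}

# Each check maps a name to a rule; the thresholds are illustrative only.
checks: Dict[str, Callable[[Dict[str, float]], bool]] = {
    "volume: at least 100 rows": lambda s: s["row_count"] >= 100,
    "completeness: email null rate <= 5%": lambda s: s["null_rate_email"] <= 0.05,
    "freshness: loaded within 24 hours": lambda s: s["hours_since_last_load"] <= 24,
}

def failed_checks(stats, checks) -> List[str]:
    # Return the names of failing checks so that someone can be alerted.
    return [name for name, rule in checks.items() if not rule(stats)]

alerts = failed_checks(run_stats, checks)
print(alerts)
```

Here only the completeness check fails, which is the kind of early signal that saves you from finding the problem in a dashboard weeks later.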

Tools for Data Observability: Monte Carlo, Databand, Metaplane, Telmai, Great Expectations.

Why care as a PM?

Data Quality requirements should always be a part of your non-functional requirements.

As a PM, as you build a data system, have observability in place; otherwise you might drown in bugs/data inefficiency.

💐 [Bonus] Further reading in Data Engineering

Know about the following if you are REALLY curious.

1. Data Mesh

Simply put, Data Mesh refers to a way of architecting your Data Pipelines in a “decentralized way” so that it follows a “micro-service” approach to data rather than a “monolithic approach.” Whaaaat? Each workflow has its own ETL system that’s useful for that workflow, e.g. ML ETL can be very different from EDA ETL. Organizations with complex data systems that need a performant data architecture will probably go for a Data Mesh. Read more here.

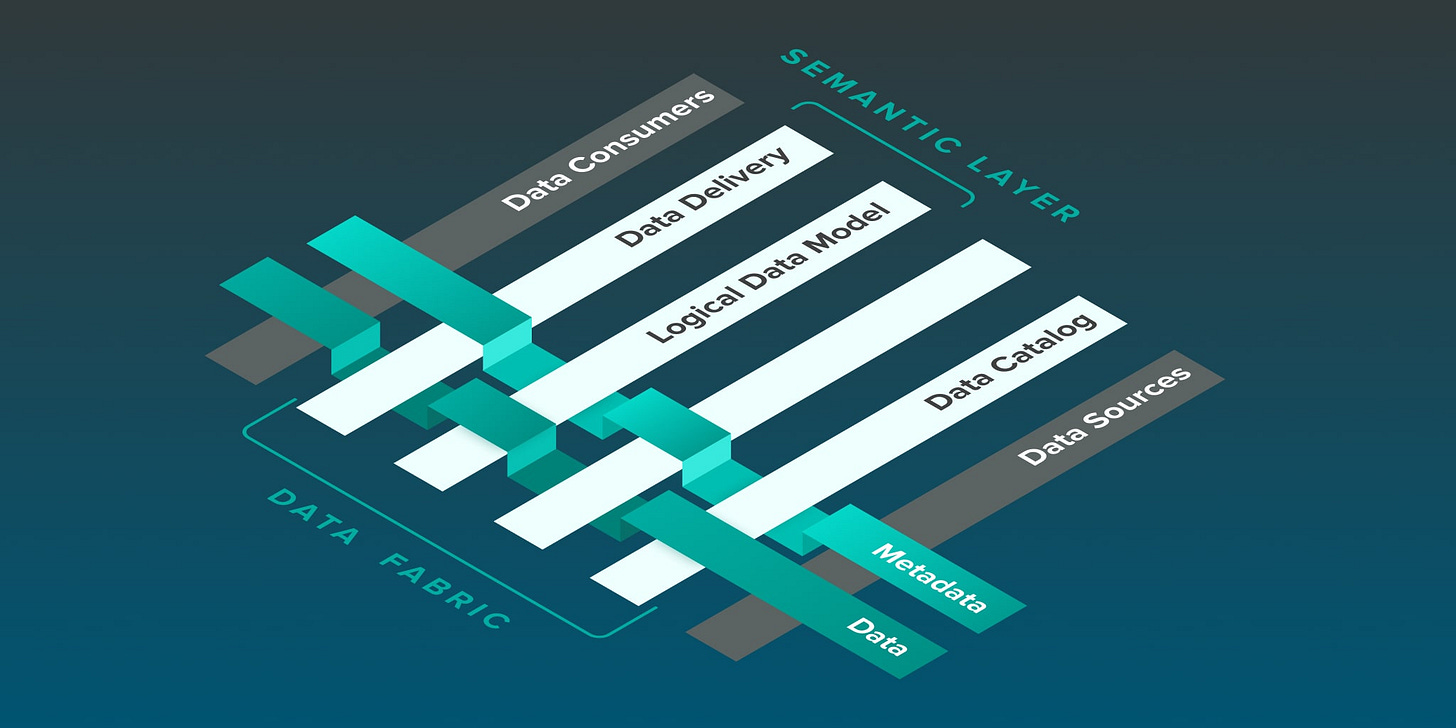

2. Data Fabric

Data Fabric is a metadata-driven design pattern for organizing data that’s platform/tool agnostic, helps with the consistency and reliability of working with data across systems, tools, etc., and helps with governance and security of data. As this article says, “The core idea behind a data fabric is to mimic weaving various data resources into a fabric that holds all of them together.” Basically, it means your company has a good system for defining metadata, and you can use that metadata across systems to build new workflows.

Here’s a practical comparison between the two approaches.

3. The State of Data Engineering 2023

The field of managing data is expanding every year. Here’s a look at the vendors across different functions as put together by lakeFS in this report below:

Other LATEST reports to look at: Airbyte’s State of Data 2023 and the Seattle Data Guy’s State of Data Engineering Part 1 & Part 2.

Hope you enjoyed getting up to speed with all things Data Engineering, and how you can use these concepts while thinking through data products in your job.

Signing off,

Richa,

Your Chief Data Obsessor, The Data PM Gazette.